Table of Contents

- The Shift from Correlation to Causality in Marketing Measurement

- Google's Measurement Ecosystem

- Meta's Approach to Proving Value

- Mobile Measurement Partner (MMP) Incrementality Solutions

- Comparative Analysis and Strategic Framework

- The Role of Mobile DSPs and Ad Networks

- The Definitive Comparison Table

- Choosing Your Measurement Approach: A Decision Framework

- Reference

1. The Shift from Correlation to Causality in Marketing Measurement

Beyond Attribution: The Fundamental Flaw of Correlation

For decades, digital marketing measurement has been dominated by attribution modeling, a practice that seeks to assign credit for a conversion to the various marketing touchpoints a user encounters. Whether through simple last-touch models that credit the final interaction or complex multi-touch attribution (MTA) models that distribute credit across the entire customer journey, the core function has remained the same: to understand which channels are present on the path to purchase.

However, this entire paradigm is built on a foundation of correlation, not causation. Attribution can reveal that a user clicked a search ad before buying a product, but it cannot answer the critical counterfactual question that lies at the heart of marketing effectiveness:

"Would this conversion have happened anyway?"

This distinction is not merely academic; it has profound implications for budget allocation and strategic planning. An attribution model might credit a branded search campaign for a sale from a loyal customer who was already navigating to the website to purchase, thereby overstating the campaign's true value.

This is the fundamental flaw of correlational analysis: it identifies channels that are involved in conversions, not necessarily those that cause them. In a world of increasing budget scrutiny, this distinction is paramount. The industry is therefore undergoing a seismic shift from asking "Which channels get credit?" to "Which channels create value?" This is the shift from attribution to incrementality.

The imperative to adopt a causal measurement framework has been dramatically accelerated by systemic changes to the digital advertising ecosystem. The very infrastructure that powered user-level attribution models—stable, cross-platform identifiers like third-party cookies and mobile ad IDs (IDFA)—is being dismantled by privacy-driven initiatives from platform owners like Apple and Google. Without the ability to reliably stitch together a user's journey across different websites and apps, the accuracy of traditional attribution models degrades significantly.

As a result, marketers are being compelled to adopt more robust, scientifically grounded measurement techniques that were once the exclusive domain of data scientists and economists. Incrementality measurement, particularly methodologies based on aggregated data, is inherently more resilient in this new privacy-centric landscape because it does not depend on tracking individuals. This evolution is not a choice but a market adaptation to a new set of technical and legal constraints, forcing a move towards a more truthful assessment of advertising's impact.

The Methodological Arsenal: A Primer on Incrementality Testing

Incrementality measurement is not a single technique but a discipline grounded in scientific and statistical methods designed to isolate causality. Understanding these core methodologies is essential for evaluating the tools offered by major platforms.

Randomized Controlled Trials (RCTs): The Gold Standard

The most rigorous method for determining causality is the Randomized Controlled Trial (RCT), a concept borrowed from clinical research. In a marketing context, an RCT involves randomly splitting a target audience into two statistically identical groups:

Test Group (or Treatment Group):

This group is exposed to the marketing activity being measured (e.g., an ad campaign).

Control Group (or Holdout Group):

This group is intentionally withheld from the marketing activity, serving as a baseline for what would have happened organically.

Because the only systematic difference between the two groups is the ad exposure, any statistically significant difference in their outcomes (e.g., conversion rates) can be causally attributed to the marketing campaign. This difference is known as the "incremental lift". The lift is often calculated as:

Lift = (Test Conversions - Control Conversions) / (Control Conversions)

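This formula yields the relative increase in conversions caused by the advertising, usually expressed as a percentage. It assumes test and control groups of equal size; when the groups differ in size, conversion rates (conversions divided by group size) should be used in place of raw counts. There are several variations of RCTs used in marketing: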

User-Level Holdouts:

This is the purest form of an RCT, where individual users are randomly assigned to either the test or control group. This methodology is the foundation of tools like Meta's Conversion Lift and Google's user-based Conversion Lift, providing a highly precise measure of impact.

PSA / Ghost Ads:

This is a sophisticated variation of an RCT where the control group is shown a "placebo" ad, such as a public service announcement (PSA) or a generic brand message, instead of the specific campaign ad.

This technique controls for the potential confounding effect of a user simply seeing any advertisement, thereby isolating the causal impact of the specific creative, offer, or message being tested.
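The lift calculation for a user-level holdout can be sketched in a few lines. The example below is a minimal illustration, not any platform's implementation: it computes lift on conversion rates so that unequal group sizes are handled, and adds a pooled two-proportion z-test as one common way to check significance. The function names and all numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def relative_lift(test_conv, test_size, control_conv, control_size):
    """Relative lift on conversion rates, so unequal group sizes are handled."""
    test_rate = test_conv / test_size
    control_rate = control_conv / control_size
    return (test_rate - control_rate) / control_rate


def two_proportion_p_value(test_conv, test_size, control_conv, control_size):
    """Two-sided p-value from a pooled two-proportion z-test."""
    p_test = test_conv / test_size
    p_ctrl = control_conv / control_size
    pooled = (test_conv + control_conv) / (test_size + control_size)
    se = sqrt(pooled * (1 - pooled) * (1 / test_size + 1 / control_size))
    z = (p_test - p_ctrl) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical experiment: 85,000 users in test, 15,000 held out.
lift = relative_lift(1200, 85_000, 180, 15_000)
p_value = two_proportion_p_value(1200, 85_000, 180, 15_000)
print(f"relative lift: {lift:.1%}, p-value: {p_value:.3f}")
```

Applying the count-based formula directly to these numbers would be misleading, because the test group is far larger than the control group. Working in rates avoids that.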

Quasi-Experiments: Adapting to Real-World Constraints

In many situations, particularly with offline media or in the absence of user-level identifiers, true randomization at the individual level is not feasible. In these cases, marketers turn to quasi-experimental designs.

Geo-Testing (Matched Market Tests):

This is a powerful and widely used quasi-experimental method. It involves selecting two or more sets of geographic regions (e.g., cities, states, or Designated Market Areas - DMAs) that are statistically similar in terms of historical sales, demographics, and other relevant factors.

One set of regions serves as the test group, where the campaign is run, while the other serves as the control group, where the campaign is held out. By comparing the performance lift in the test geos against the control geos, marketers can measure the campaign's incremental impact at an aggregate level. The validity of this method hinges entirely on the quality of the market matching; poorly matched markets can lead to biased and unreliable results.
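As a rough sketch of the comparison described above, one simple approach is to scale the test geos' pre-campaign baseline by the growth observed in the control geos and treat the remainder as incremental. This is only one of several possible estimators, and it assumes the markets are well matched. The function name and the weekly sales figures are hypothetical.

```python
def geo_lift_estimate(test_pre, test_post, control_pre, control_post):
    """Estimate incremental units in the test geos.

    The counterfactual is the test geos' pre-period total scaled by the
    growth observed in the control geos over the same window.
    """
    control_growth = sum(control_post) / sum(control_pre)
    expected_test_post = sum(test_pre) * control_growth
    incremental = sum(test_post) - expected_test_post
    return incremental, incremental / expected_test_post


# Hypothetical weekly sales, three weeks before and three weeks during the campaign.
test_pre, test_post = [100, 110, 90], [130, 125, 120]
control_pre, control_post = [200, 210, 190], [210, 220, 200]

incremental, lift = geo_lift_estimate(test_pre, test_post, control_pre, control_post)
print(f"incremental units: {incremental:.1f}, lift: {lift:.1%}")
```

If the control markets do not track the test markets closely in the pre-period, the scaled baseline is wrong and so is the estimate, which is why market matching matters so much.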

Model-Based Approaches: The New Frontier

A third category of incrementality measurement relies on advanced statistical modeling to estimate the causal impact without running a live experiment. These methods are particularly valuable for their "always-on" nature and their ability to analyze past events.

This broad category encompasses various algorithmic techniques that analyze historical data from noisy, multi-variant environments to draw conclusions about causality. These models attempt to mathematically construct the counterfactual—what would have happened in the absence of a marketing intervention—by analyzing patterns in time-series data and controlling for external factors like seasonality and promotions.

Synthetic Control Method:

This is a specific and highly sophisticated form of causal modeling that has gained prominence in econometrics and is now being adopted by marketing technology platforms. Instead of finding a single control region that matches the test region, the synthetic control method creates an optimal "synthetic" control by taking a weighted average of multiple unexposed units (e.g., other apps, regions). The weights are algorithmically chosen so that the synthetic control's pre-intervention performance trend tracks that of the treated unit as closely as possible. After the intervention, the divergence between the treated unit's actual performance and the synthetic control's projected performance represents the estimated incremental effect. This is the core methodology behind Adjust's incrementality solution.
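The weighting step can be illustrated with a toy example. The sketch below is not Adjust's implementation. It simply grid-searches non-negative weights that sum to 1 over a very small donor pool, which is workable for three donors but would need a proper constrained optimizer in practice. All names and series are hypothetical.

```python
from itertools import product


def fit_weights(treated_pre, donors_pre, step=0.05):
    """Grid-search donor weights (non-negative, summing to 1) that minimise
    squared error against the treated unit in the pre-intervention period."""
    n = len(donors_pre)
    ticks = round(1 / step)
    best_w, best_err = None, float("inf")
    for combo in product(range(ticks + 1), repeat=n - 1):
        if sum(combo) > ticks:
            continue  # the last weight would be negative
        w = [c / ticks for c in combo] + [(ticks - sum(combo)) / ticks]
        err = sum(
            (t - sum(wi * d[i] for wi, d in zip(w, donors_pre))) ** 2
            for i, t in enumerate(treated_pre)
        )
        if err < best_err:
            best_w, best_err = w, err
    return best_w


def synthetic_series(weights, donors):
    """Weighted average of the donor series, period by period."""
    return [sum(w * d[i] for w, d in zip(weights, donors)) for i in range(len(donors[0]))]


# Hypothetical pre-intervention series for three unexposed donors and the treated unit.
donors_pre = [[100, 102, 104, 106], [50, 55, 60, 65], [200, 190, 180, 170]]
treated_pre = [75, 78.5, 82, 85.5]
weights = fit_weights(treated_pre, donors_pre)

# Post-intervention: project the synthetic control and compare with actuals.
donors_post = [[108, 110], [70, 75], [160, 150]]
treated_post = [100, 104]
projected = synthetic_series(weights, donors_post)
incremental = sum(a - p for a, p in zip(treated_post, projected))
print(weights, projected, incremental)
```

A negative `incremental` value in such a setup would point to the treated unit underperforming its synthetic counterpart after the intervention.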

2. Google's Measurement Ecosystem

Google's approach to incrementality is multifaceted, combining direct, in-platform experimental tools with a broader investment in open-source modeling and foundational research. This strategy aims to provide advertisers with a range of options to prove and optimize the value of their investments across Google's vast advertising portfolio.

Tool Deep Dive: Conversion Lift

Google's primary in-platform tool for incrementality measurement is Conversion Lift.

At its core, Conversion Lift is an RCT-based solution designed to measure the causal impact of Google Ads campaigns by experimentally separating an audience into test and control groups and quantifying the resulting difference in conversions. The tool is not universally available and often requires advertisers to work with their Google account representative to gain access.

A key feature of the setup process is a "feasibility rating" (High, Medium, or Low), which estimates the likelihood of achieving a statistically significant result based on the campaign's expected conversion volume. Google strongly recommends running studies only with a "High" feasibility rating to ensure conclusive outcomes.

Conversion Lift offers two distinct methodologies tailored to different campaign types and measurement needs:

User-Based Lift:

This method employs a classic user-level RCT, randomly assigning individual users to either an exposed group that sees the ads or a holdback group that does not. It is well-suited for campaigns with smaller budgets and can be run in conjunction with other measurement studies like Brand Lift or Search Lift.

User-based lift is primarily available for upper-funnel campaign types such as Video, Discovery, and Demand Gen, though access for other types like Search and Performance Max may be possible through a Google representative.

The key output metrics include Incremental Conversions (the absolute number of additional conversions caused by the ads) and Relative Conversion Lift (the percentage increase in conversions).

Geography-Based Lift:

This method utilizes a geo-based experimental design, splitting the audience by geographic regions rather than individual users. It is designed for larger-scale campaigns and has the significant advantage of being able to measure impact on any conversion source, including first-party offline sales data, without relying on cookies. This makes it a more durable, privacy-resilient methodology.

Google states that this approach is built on some of its most sophisticated open-source methods, such as Trimmed Match and Time-based Regression, to ensure accurate matching and analysis of geographic areas. The primary metric for this type of test is Incremental Return on Ad Spend (iROAS), which provides a direct measure of profitability.
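For reference, iROAS is simply incremental revenue divided by ad spend. The sketch below shows the arithmetic and adds a break-even check. The gross margin used in the break-even calculation is an assumption introduced purely for illustration and does not come from Google's tooling. All figures are hypothetical.

```python
def incremental_roas(test_revenue, counterfactual_revenue, ad_spend):
    """Incremental revenue generated per unit of ad spend."""
    return (test_revenue - counterfactual_revenue) / ad_spend


def break_even_iroas(gross_margin):
    """iROAS needed for incremental gross profit to cover ad spend.

    gross_margin is a hypothetical input, e.g. 0.4 for a 40% margin.
    """
    return 1 / gross_margin


# Hypothetical geo test: revenue in test geos vs. the estimated counterfactual.
iroas = incremental_roas(test_revenue=160_000, counterfactual_revenue=100_000, ad_spend=10_000)
threshold = break_even_iroas(gross_margin=0.4)
print(f"iROAS: {iroas:.2f}, break-even at 40% margin: {threshold:.2f}")
```

The break-even check is a reminder that an iROAS above 1 covers the media cost in revenue terms only. Whether it is profitable depends on the margin on those incremental sales.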

Beyond Experiments: Google's Broader Measurement Philosophy

Google's commitment to causal measurement extends beyond the Conversion Lift tool. The company is actively contributing to the broader field through open-source initiatives and extensive internal research, signaling a holistic approach to proving advertising value.

Meridian: Recognizing the need for portfolio-level measurement, Google has developed and open-sourced Meridian, a Bayesian Marketing Mix Modeling (MMM) code library. MMM is a top-down statistical technique that analyzes historical data to quantify the sales impact of various marketing channels and external factors.

By providing Meridian as an open-source tool, Google is empowering sophisticated advertisers and data science teams to build their own custom, transparent MMM solutions. This complements the tactical, campaign-level insights from Conversion Lift with a strategic, cross-channel view of performance.

Search Ads Pause Studies

To address one of the most persistent questions in digital marketing—the degree to which paid search cannibalizes organic traffic—Google has conducted extensive internal research. A landmark meta-analysis of over 400 "Search Ads Pause Studies" involved pausing search campaigns and observing the impact on organic clicks. The striking conclusion was that, on average, 89% of clicks generated by search ads are incremental.

This means that nearly nine out of ten visits from a paid search ad would not have been recovered through organic results had the ads been turned off. This body of research provides a powerful, data-backed justification for investment in paid search, directly countering common concerns about channel overlap and inefficiency.
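The logic of a pause study can be expressed as simple arithmetic: compare the paid clicks lost during the pause with the organic clicks gained over the same period. The sketch below is an illustrative simplification, not the estimator used in Google's research, and the click counts are hypothetical.

```python
def incremental_click_share(paid_clicks_lost, organic_clicks_gained):
    """Share of paid clicks that organic results did not recover after a pause."""
    return 1 - organic_clicks_gained / paid_clicks_lost


# Hypothetical pause: 1,000 paid clicks disappear, organic clicks rise by 110.
share = incremental_click_share(paid_clicks_lost=1000, organic_clicks_gained=110)
print(f"incremental share of paid clicks: {share:.0%}")
```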

In Practice: Google Incrementality Case Studies

The practical application of Conversion Lift demonstrates its ability to both validate high-performing strategies and identify areas for improvement.

Scenario 1: Strategy Validation

A beauty brand implemented a Conversion Lift test for its Performance Max campaigns, which leverage AI to run ads across multiple Google channels.

The experiment revealed an incremental ROAS of £6 for every £1 invested, meaning incremental revenue equal to 600% of spend. This provided the brand with the causal evidence needed to confidently increase its budget for the campaign, knowing that the investment was driving true, profitable growth.

Scenario 2: Inefficiency Identification

A financial institution ran a geo-based Conversion Lift test on a YouTube campaign designed to drive sales. The results showed an incremental ROAS of £1.10 for every £1 spent.

While incremental revenue narrowly exceeded spend, the low return signaled that the campaign was not performing as efficiently as desired. This insight served as an early warning, prompting the marketing team to revisit their creative and targeting strategies on the platform to improve its incremental contribution to business goals.

These examples illustrate the dual role of incrementality testing: it is not only a tool for justifying spend but also a diagnostic instrument for uncovering inefficiencies that correlational metrics might otherwise obscure. This aligns with a broader strategic shift by Google to make such powerful measurement tools more accessible.

Historically, incrementality testing was a complex and costly endeavor reserved for advertisers with massive budgets. However, recent moves, such as lowering the minimum experiment budget to as little as $5,000 through the use of Bayesian statistical methods, indicate a deliberate effort to democratize this capability. Bayesian approaches can draw meaningful conclusions from smaller sample sizes by incorporating prior knowledge, making tests faster and more affordable.

By integrating these tests directly into the Google Ads interface, Google is lowering both the financial and technical barriers to entry. This serves a clear strategic purpose: in an era where marketers demand causal proof of value, providing easy-to-use, in-platform tools becomes a competitive necessity to defend and grow advertising revenue.
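To give a sense of how a Bayesian analysis of a small test might look, the sketch below uses a Beta-Binomial model and Monte Carlo sampling to estimate the probability that the test group's conversion rate exceeds the control group's. This is a generic textbook approach, not a description of Google's internal method. The flat Beta(1, 1) prior and all counts are assumptions; an informative prior could be supplied through the hypothetical `prior_a` and `prior_b` parameters.

```python
import random


def prob_test_beats_control(test_conv, test_n, ctrl_conv, ctrl_n,
                            prior_a=1.0, prior_b=1.0, draws=20_000, seed=0):
    """Posterior probability that the test conversion rate exceeds control.

    Each group's rate gets a Beta(prior_a, prior_b) prior, updated with the
    observed conversions and non-conversions.
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        p_test = rng.betavariate(prior_a + test_conv, prior_b + test_n - test_conv)
        p_ctrl = rng.betavariate(prior_a + ctrl_conv, prior_b + ctrl_n - ctrl_conv)
        wins += p_test > p_ctrl
    return wins / draws


# Hypothetical small test: 60 of 2,000 users convert in test, 40 of 2,000 in control.
probability = prob_test_beats_control(60, 2000, 40, 2000)
print(f"P(test rate > control rate) = {probability:.3f}")
```

The output is a direct probability statement about the effect, which is often easier to act on with limited data than a pass/fail significance test.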

3. Meta's Approach to Proving Value

Meta has long been at the forefront of providing advertisers with tools to measure the causal impact of their campaigns on its platforms, Facebook and Instagram. Its approach has evolved from offering classic experimental tools to pioneering a new model that integrates incrementality directly into the ad delivery system.

The Experimental Toolkit: Conversion Lift and GeoLift

Meta's foundational incrementality tools are methodologically robust and mirror the gold-standard experimental designs used across the industry.

Conversion Lift Study (CLS)

This is Meta's primary tool for conducting user-level RCTs. When a CLS is initiated, Meta randomly divides the advertiser's target audience into a test group, which is eligible to see the ads, and a control (or holdout) group, which is not. By comparing the conversion rates between these two groups, the study calculates the incremental "lift" in conversions that can be causally attributed to the ad campaign.

Running a successful CLS requires a strong data foundation. Advertisers must have fully implemented Meta's Conversions API (CAPI) to ensure reliable server-side event tracking, maintain a high Event Match Quality (EMQ) score to accurately link events to users, and generate a sufficient volume of weekly conversions (typically at least 50–100 per test cell) to achieve statistical significance.
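The conversion-volume requirement follows from basic power analysis. As a rough planning aid, the sketch below applies the standard sample-size formula for comparing two proportions. It is a generic statistical approximation, not Meta's feasibility logic, and the baseline rate, expected lift, significance level, and power are all assumed inputs.

```python
from math import ceil
from statistics import NormalDist


def users_per_cell(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate users needed in each cell to detect a given relative lift
    with a two-sided test on two proportions."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical plan: 2% baseline conversion rate, hoping to detect a 10% relative lift.
needed = users_per_cell(baseline_rate=0.02, relative_lift=0.10)
print(f"users needed per cell: {needed:,}")
```

Smaller expected lifts or lower baseline rates push the required audience up sharply, which is why low-volume advertisers often struggle to reach a conclusive result.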

GeoLift

For scenarios where user-level randomization is not ideal or possible, Meta offers GeoLift, a tool for conducting matched market tests. This method involves comparing performance in geographic regions where ads are active against similar regions where ads have been paused.

GeoLift is often considered a strong choice for measuring the total impact of a campaign, as aggregate geographic comparisons can capture effects that might be missed by user-level tracking alone.

The New Paradigm: Incremental Attribution

While Conversion Lift studies provide powerful point-in-time insights, Meta's most significant recent innovation is the introduction of Incremental Attribution, a new setting within Ads Manager. This feature represents a fundamental shift from using incrementality as a periodic measurement exercise to using it as an "always-on" optimization signal.

Instead of requiring advertisers to manually set up and run individual lift tests, the Incremental Attribution setting leverages Meta's vast repository of data from a decade of running lift studies.

It uses sophisticated machine learning models, calibrated by ongoing, behind-the-scenes holdout tests, to predict in real-time which conversions are truly incremental—that is, which conversions would not have happened without ad exposure.

Strategic Implication

The true breakthrough of this feature is that it operationalizes causality. Advertisers can now select "Incremental Attribution" as a performance goal when setting up a campaign. This instructs Meta's ad delivery system to optimize not just for any conversion, but specifically for conversions that its models predict will be incremental.

The system will actively bid for users who are most likely to be influenced by an ad, rather than those who were likely to convert anyway. Early tests conducted by Meta have shown that campaigns using this setting saw an average improvement of more than 20% in incremental conversions.

This development moves incrementality from the domain of post-campaign analysis into the core of real-time, automated ad delivery. Traditional lift studies provide a report card after the fact, which a marketer must then interpret and manually translate into future strategy.

Incremental Attribution automates this feedback loop, allowing the ad auction itself to be guided by causal predictions. This has the potential to make campaign optimization far more efficient and could fundamentally change how advertisers approach performance goals on the platform, shifting the focus from the volume of conversions to the causal quality of those conversions.

In Practice: Meta Incrementality Case Studies

Real-world examples highlight both the immense power and the critical context-dependency of Meta's incremental impact.

Uber

In a widely cited example of incrementality testing's power, Uber conducted a large-scale experiment that revealed a significant portion of its spend on Meta user acquisition ads was non-incremental. The company found that in saturated urban markets, the ads were largely reaching users who would have signed up organically.

This discovery led to a $35 million reduction in their Meta ad budget, which was then reallocated to other growth initiatives like Uber Eats. This case serves as a crucial cautionary tale about the dangers of relying solely on correlational attribution models, which can mask significant inefficiencies.

The Haus "Meta Report"

Providing a powerful counterpoint to the Uber example, an analysis of 640 separate incrementality experiments conducted on the Haus platform found that Meta campaigns are, in the vast majority of cases, highly incremental. The analysis revealed that Meta drove an average lift of approximately 19% to a brand's primary KPI. Furthermore, 77 of the 100 highest-lift experiments ever run on the platform were Meta tests.

This data suggests that while inefficiencies like those found by Uber are possible, they are not the norm. Instead, Meta's incremental effectiveness is highly dependent on factors like market maturity, brand strength, and campaign strategy, reinforcing the need for every advertiser to conduct their own tests.

Hunch Creative DPA Study

A four-week Conversion Lift Study was conducted to measure the impact of creative optimization on Dynamic Product Ads (DPAs). The test compared standard DPA templates against creatives enhanced by Hunch's AI-powered tools.

The results showed that the enhanced creatives drove an incremental ROAS lift of 2.3x, demonstrating that incrementality testing is a valuable tool not just for channel validation but also for tactical optimization of creative and messaging.

4. Mobile Measurement Partner (MMP) Incrementality Solutions

Mobile Measurement Partners (MMPs) have traditionally focused on last-touch attribution for mobile app installs and events. However, as the industry shifts towards causal measurement, leading MMPs have developed their own incrementality solutions, offering distinct methodologies that reflect different philosophies on how to best measure true marketing impact.

AppsFlyer's "Incrementality" for Remarketing

AppsFlyer offers a dedicated premium tool named Incrementality, which is specifically designed to measure the value of mobile app remarketing campaigns.

The rationale for this focus is that remarketing audiences, by definition, consist of users who have already engaged with an app, making them more likely to convert organically. Therefore, isolating the true incremental impact of remarketing ads is particularly crucial.

The tool is built on a classic RCT framework. When a marketer creates an audience segment for a remarketing campaign, the Incrementality feature automatically splits that audience into a test group and a control group.

The test group is sent to the designated ad networks for targeting, while the control group is held back. The default split is typically 85% for the test group and 15% for the control group. The experiment is managed and analyzed through a dedicated Incrementality dashboard within the AppsFlyer platform.
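One common way to implement a stable random split of this kind is to hash each user ID together with an experiment ID and bucket the result. The sketch below illustrates that general technique under the 85/15 split mentioned above. It is not a description of how AppsFlyer assigns users, and the function and identifiers are hypothetical.

```python
import hashlib


def assign_group(user_id, experiment_id, control_share=0.15):
    """Deterministically assign a user to 'test' or 'control'.

    Hashing the user ID with the experiment ID gives a stable, effectively
    uniform bucket in [0, 1), so the same user always lands in the same group.
    """
    digest = hashlib.sha256(f"{experiment_id}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000
    return "control" if bucket < control_share else "test"


# Hypothetical audience of 20,000 users in one remarketing experiment.
groups = [assign_group(f"user-{i}", "remarketing-exp-a") for i in range(20_000)]
control_fraction = groups.count("control") / len(groups)
print(f"control share: {control_fraction:.1%}")
```

Including the experiment ID in the hash means a user held out in one experiment is not automatically held out in the next.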

Key Differentiator

Two Calculation Methods: A critical and nuanced feature of AppsFlyer's solution is that it offers two distinct methods for calculating lift, depending on the type of media partner involved. This reflects the different data signals available from various networks.

Intent-to-Treat (ITT)

This method, borrowed from medical science, compares the outcomes of the entire test group to the control group, regardless of whether every user in the test group was actually exposed to an ad. It measures the effect of the intention to treat the group.

This provides a more conservative and realistic measure of a campaign's overall effectiveness in real-world conditions. AppsFlyer uses the ITT method for Self-Reporting Networks (SRNs) like Meta and Google, which do not pass back granular impression-level data for every user.

Reach-Based:

This method compares the outcomes of the control group to only those users in the test group who were actually reached by an ad (i.e., served an impression). This approach more directly isolates the effect of the ad exposure itself.

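However, because it excludes unreached users from the test group's denominator, it can show amplified results compared to ITT. Reached users may also differ systematically from the control group (for example, by being more active), so part of the measured difference can reflect who was reached rather than the effect of the ad. This method is used for non-SRN ad networks that can provide the necessary impression-level data back to AppsFlyer.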
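The difference between the two calculations is easiest to see side by side. The sketch below computes both on the same hypothetical experiment. The formulas follow the descriptions above rather than AppsFlyer's exact implementation, and all counts are invented for illustration.

```python
def relative_lift(treated_rate, control_rate):
    """Relative increase of the treated conversion rate over control."""
    return (treated_rate - control_rate) / control_rate


# Hypothetical experiment: 85,000 users in test, of whom 40,000 were served an ad.
test_size, test_conv = 85_000, 1_190        # whole test group
reached_size, reached_conv = 40_000, 680    # test users who saw an impression
control_size, control_conv = 15_000, 180

control_rate = control_conv / control_size

# Intent-to-Treat: the entire test group, exposed or not.
itt = relative_lift(test_conv / test_size, control_rate)

# Reach-based: only test users who were actually reached.
reach_based = relative_lift(reached_conv / reached_size, control_rate)

print(f"ITT lift: {itt:.1%}, reach-based lift: {reach_based:.1%}")
```

With these numbers the reach-based figure is well above the ITT figure, which matches the amplification described above.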

Adjust's "InSight" and the Synthetic Control Method

In contrast to AppsFlyer's experimental approach, Adjust's incrementality solution, InSight, is built on a fundamentally different, model-based methodology. It is designed to provide "always-on" causal insights without the need to run live holdout experiments.

Synthetic Control Groups: InSight's core technology is the synthetic control method. Instead of creating a control group by holding back real users, it algorithmically constructs a "synthetic" control. The process works as follows:

Data Modeling

The system analyzes 12 weeks of an app's historical, aggregated data, including metrics like installs, events, ad spend, monthly active users (MAU), and daily sessions. This data is combined with aggregated data from Adjust's vast global dataset of tens of thousands of apps.

Control Group Creation

Using machine learning, InSight identifies a "donor pool" of other apps from its dataset that are similar to the target app (e.g., in the same vertical, country, and of a comparable size). It then calculates an optimal weighted average of these donor apps to create a single synthetic control group whose historical performance trend closely matches the target app's trend before a specific marketing action took place.

Causal Estimation

The model then projects how the synthetic control would have performed after the marketing action, based on its established trend. The difference between the target app's actual performance and the synthetic control's projected performance is calculated as the incremental lift (or cannibalization).

Use Cases

This non-disruptive approach is ideal for measuring the impact of specific, time-bound marketing actions, such as starting or stopping a campaign, launching on a new network, or significantly increasing or decreasing a budget.

Because it does not require pausing campaigns or holding out users, it avoids the opportunity cost associated with live experiments. Adjust claims its models can achieve a confidence level of greater than 95%.

This divergence in methodologies between AppsFlyer and Adjust highlights a central debate in the field of marketing science. AppsFlyer's RCT-based tool adheres to the classical scientific method, offering high internal validity for a specific, planned experiment. Its results are a direct measurement, but they come at the cost of active intervention and provide only a point-in-time snapshot.

Adjust's model-based tool offers operational convenience and the ability to continuously analyze performance without disruption. Its results are a sophisticated estimate, however, and their accuracy is contingent on the quality of the underlying model and data. This presents marketers with a strategic choice between the rigorous, unambiguous results of a live experiment and the convenient, scalable insights from a statistical model.

In Practice: MMP Case Studies

Sleep Cycle & Adjust InSight: The popular sleep tracking app, Sleep Cycle, used Adjust's InSight to analyze its campaign performance. The model-based analysis provided granular insights that would have been difficult to obtain from a simple holdout test.

It revealed that some campaigns were causing up to 50% organic cannibalization, meaning they were paying for installs that would have happened anyway. It also identified specific ad partners that had mixed effects—providing positive lift on Android but a negative impact on iOS. These precise, causal insights enabled the team to reallocate budget away from cannibalistic activities and optimize their partner mix by platform, improving overall marketing efficiency.

Singular's Role as a Data Foundation

Enabling, Not Executing: Clarifying Singular's Position

An analysis of the five platforms reveals a critical distinction in their market positioning. While Google, Meta, AppsFlyer, and Adjust offer specific, named tools designed to execute incrementality tests or model causal impact, Singular does not offer a proprietary, standalone incrementality testing product in the same vein.

Instead, Singular's core value proposition is to serve as a next-generation MMP and marketing analytics platform that provides the essential data foundation upon which all reliable measurement, including incrementality, is built.

The Value of Clean, Unified Data: The Prerequisite for All Measurement

The central thesis for Singular's role in the incrementality ecosystem is that no measurement methodology—whether it be an RCT, a geo-test, or a causal model—can produce accurate results without a foundation of clean, complete, and trustworthy data. The "garbage in, garbage out" principle applies with particular force to causal analysis. Singular provides this foundational layer by performing several critical functions:

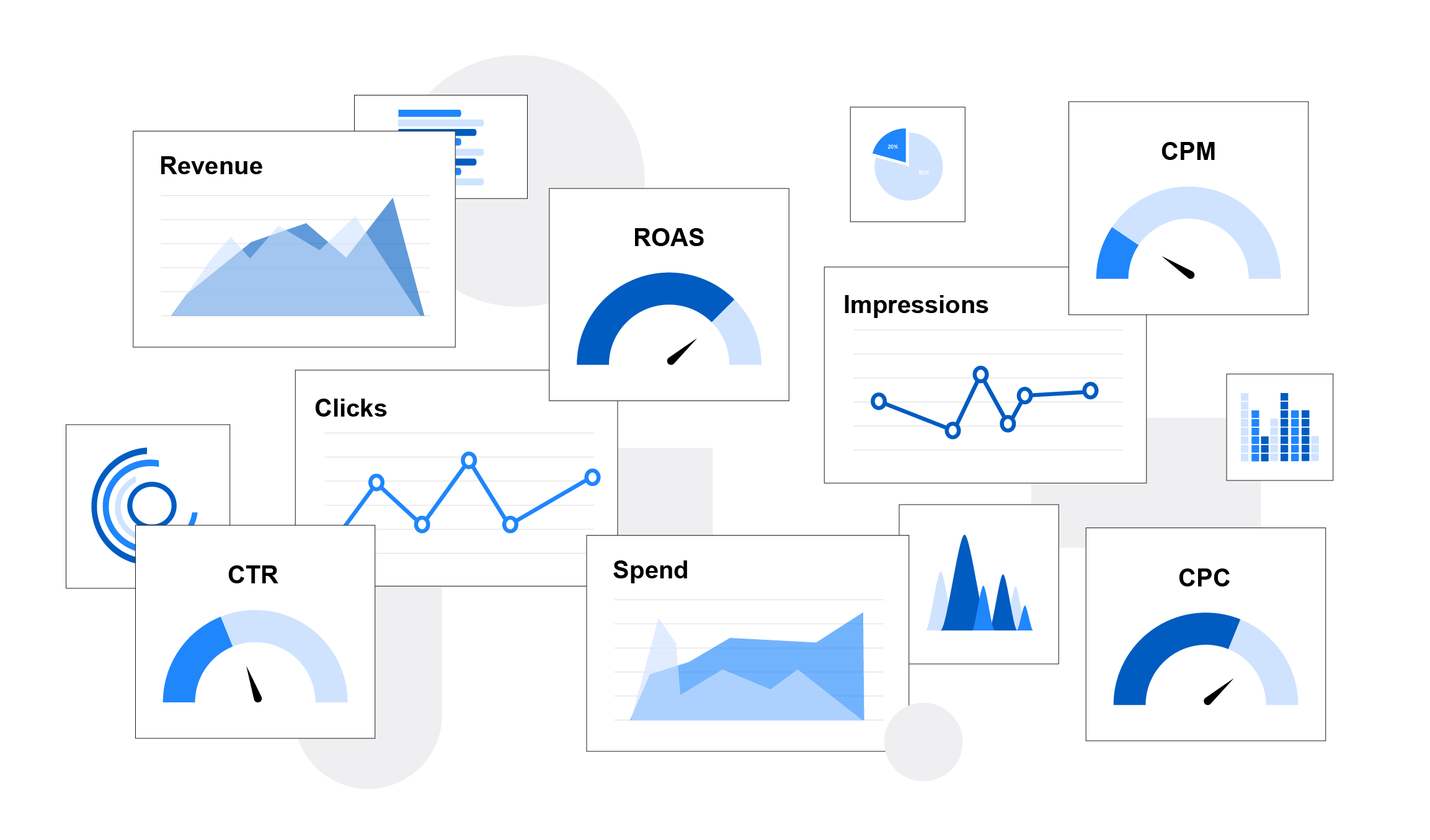

Unifying Data:

Modern marketing campaigns are spread across dozens of channels. Singular connects to thousands of sources to automatically pull and combine cost, attribution, creative, and monetization data into a single, unified source of truth. This eliminates data silos and provides the holistic view necessary for any cross-channel analysis.

Ensuring Data Integrity:

The digital advertising ecosystem is rife with ad fraud, which can severely distort performance data and lead to flawed conclusions. Singular's platform includes advanced, real-time fraud prevention that blocks fake impressions, clicks, and installs before they can contaminate the dataset being used for analysis.

Providing Granular Insights:

By unifying cost and attribution data, Singular delivers granular ROAS insights at the source, campaign, creative, and keyword levels. This detailed performance data is the raw material that marketers use to form hypotheses for their incrementality tests. For example, a marketer might observe a high ROAS for a specific campaign in Singular and then design an incrementality test in Google or Meta to determine if that ROAS is truly incremental.

Therefore, a platform like Singular should not be viewed as a competitor to the other incrementality tools discussed in this report. Rather, it is a foundational enabler. A sophisticated marketing organization would use Singular to collect, clean, and organize its data, and then use that reliable dataset to either power an experiment within Google or Meta, or to feed the causal models of a platform like Adjust.

This positioning reflects a broader structuring of the modern marketing measurement market, which is bifurcating into two distinct but complementary layers.

a. The first is the Data Aggregation & Integrity Layer, where platforms like Singular operate. Their primary function is to solve the immense challenge of data fragmentation and corruption in a complex ecosystem.

b. The second is the Causal Measurement & Experimentation Layer, where the specialized tools from Google, Meta, AppsFlyer, and Adjust reside. Their function is to apply advanced analytical techniques to the data to derive causal insights.

A marketer's measurement stack is incomplete without solutions for both layers. Attempting to conduct an incrementality test without a solid data foundation is akin to building a house on sand; the structural integrity of the conclusions will be fundamentally compromised by the poor quality of the inputs.

5. Comparative Analysis and Strategic Framework

The landscape of incrementality measurement presents marketers with a set of distinct philosophies and methodologies. Choosing the right approach requires a clear understanding of the trade-offs between experimental rigor, operational convenience, and strategic objectives. The primary strategic choice boils down to a decision between two dominant schools of thought: the experimentalists and the modelers.

Methodological Showdown: The Experimentalists vs. The Modelers

The Experimentalists (Google, Meta, AppsFlyer)

These platforms champion the use of live, controlled experiments, primarily RCTs, as the most reliable way to determine causality.

1. Pros: This approach is widely considered the "gold standard" for proving a causal link. When properly designed and executed, the results have high internal validity and are scientifically defensible. The concept of comparing a test group to a control group is also relatively intuitive and easy for stakeholders to understand.

2. Cons: The primary drawback is the opportunity cost incurred by withholding advertising from the control group; by definition, the business is forgoing potential incremental conversions from that segment for the duration of the test. These experiments can also be slow to yield results, often requiring several weeks to collect enough data for statistical significance. Finally, they provide a point-in-time snapshot of performance under specific market conditions, which may not hold true indefinitely.

The Modelers (Adjust)

This approach, exemplified by Adjust's InSight tool, champions the use of advanced statistical modeling on observational data to estimate causal impact without running a live experiment.

1. Pros: The most significant advantage is the elimination of opportunity cost, as no live holdout group is required, and campaigns are not disrupted. This allows for "always-on" measurement and the ability to analyze the impact of past marketing actions. This method is operationally convenient and provides insights much more rapidly than a live experiment.

2. Cons: The accuracy of a model-based approach is entirely dependent on the validity of the model itself and the quality of the historical data used to train it. This can create a "black box" problem, where the methodology is less transparent and harder for marketers to interrogate. Furthermore, these models rely on the assumption that historical patterns are predictive of future outcomes, which may not be the case during periods of high market volatility or when launching entirely new, unprecedented strategies.
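The general idea of estimating a counterfactual from observational data can be sketched in a few lines. The example below is a deliberately simplified stand-in, not Adjust's actual method: it fits a straight line between a comparison series and the treated series over a pre-period, then projects what the treated series would have looked like afterwards. All names and numbers are hypothetical.

```python
def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def estimate_incremental(pre_control, pre_treated, post_control, post_treated):
    """Actual post-period conversions minus the modeled counterfactual."""
    # Learn how the treated series tracked the comparison series before the change.
    slope, intercept = fit_line(pre_control, pre_treated)
    # Project the treated series forward as if nothing had changed.
    counterfactual = [intercept + slope * c for c in post_control]
    return sum(post_treated) - sum(counterfactual)


# Hypothetical daily conversions: a comparison series and the series being evaluated.
pre_control = [100, 110, 120, 130]
pre_treated = [200, 220, 240, 260]
post_control = [140, 150]
post_treated = [310, 330]

print(estimate_incremental(pre_control, pre_treated, post_control, post_treated))
```

The sketch also makes the "Cons" visible: the estimate is only as good as the assumption that the pre-period relationship would have continued unchanged.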

6. The Role of Mobile DSPs and Ad Networks

The mobile advertising ecosystem extends far beyond major platforms and Mobile Measurement Partners (MMPs) to encompass a diverse array of Demand-Side Platforms (DSPs) and ad networks. These vendors serve as essential drivers of user acquisition and retargeting campaigns, yet their approaches to proving incrementality differ dramatically.

The landscape divides into two distinct categories: sophisticated platforms that have built proprietary, in-platform incrementality tools to demonstrate their value, and traditional media channels that rely on external, third-party measurement solutions to validate their impact. This fundamental distinction becomes critical when marketers design comprehensive measurement strategies that accurately assess campaign performance across multiple touchpoints.

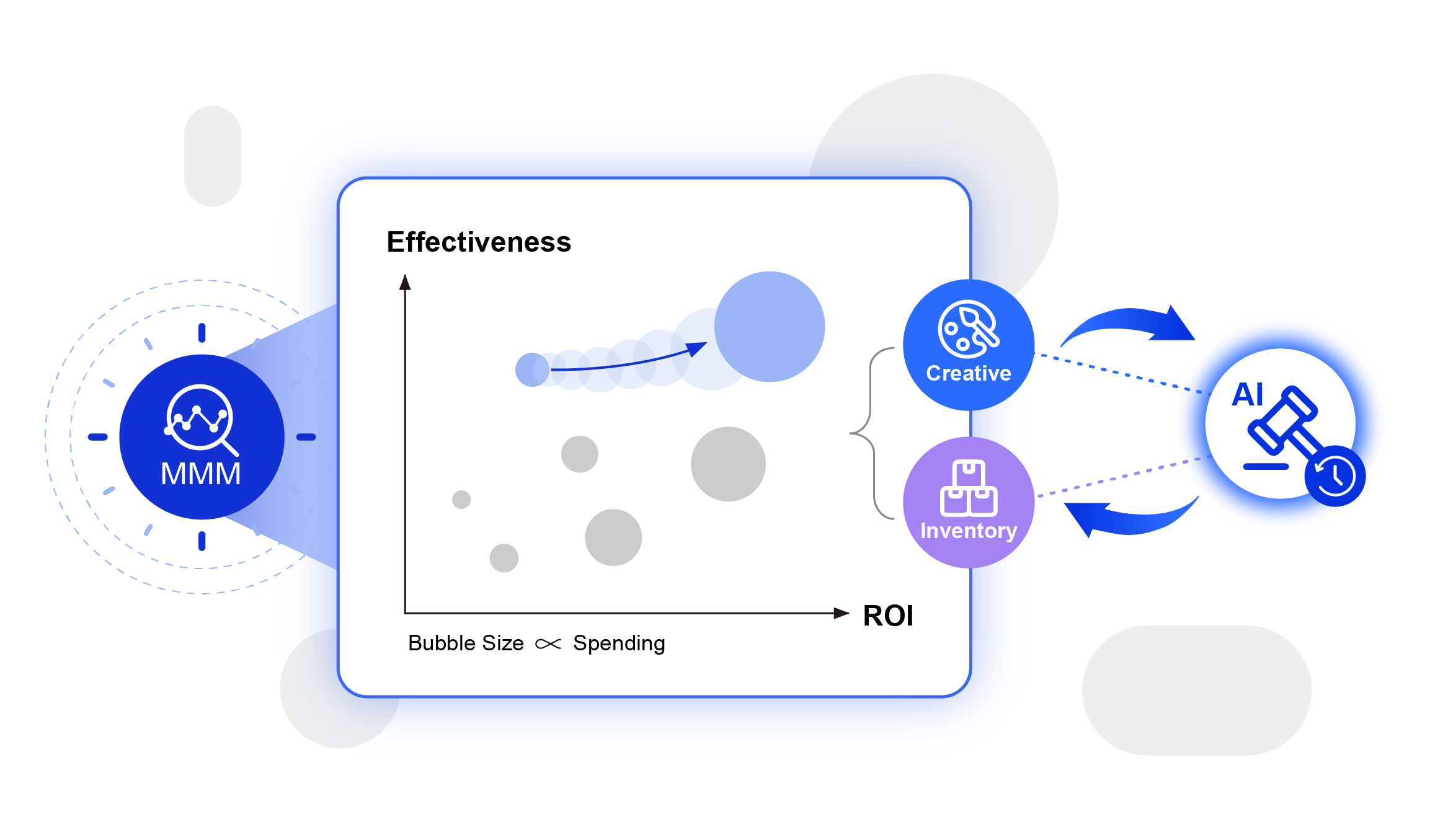

Leading this evolution, mobile DSP Appier has introduced "Agentic Incrementality," a solution powered by its agentic AI technology. It enables marketers to optimize incrementality through always-on, real-time measurement using Media Mix Modeling (MMM) methodology, a notable change in how the industry approaches performance validation and optimization.

7. The Definitive Comparison Table

The following table synthesizes the key attributes of each platform's incrementality solution, providing a structured framework for comparison. It distills the complex landscape into a scannable, decision-making tool that allows marketing leaders to align a platform's capabilities with their specific business needs, technical resources, and strategic goals.

| Platform | Methodology (Incrementality Test Type) |
| --- | --- |
| Meta – Conversion Lift | Holdout group lift studies using Meta’s ad platform. Meta splits the audience into test vs. control within the campaign and measures the incremental conversions or revenue caused by ads by comparing the two. Also supports GeoLift (open-source) for geo experiments. |
| AppsFlyer – “Incrementality” | Cross-network incrementality experiments with user-level test & control groups. Runs A/B tests by withholding a control group from specific ads and observing lift in conversions for the exposed group. Uses Intent-to-Treat vs. Reach-based lift calculation depending on data availability. |
| Adjust – InSight (AI Lift) | AI-driven incrementality analysis using causal modeling on aggregated historical data (no explicit holdout). Employs machine learning to estimate incremental vs. organic conversions per campaign. Essentially a model-based lift measurement (e.g. uses time-series, synthetic controls). |
| Singular – (No dedicated name) | Custom incrementality testing supported through data analytics. Marketers use methods like manual holdouts, geo-experiments, and on/off testing, and leverage Singular’s attribution data to measure the lift. Singular provides unified reporting to compare test vs. control performance. |
| Appier's "Agentic Incrementality" | Uses Media-Mix Modeling (MMM) for campaign-level incrementality tests. Measures campaign effectiveness across different ad channels, inventory categories, ad placements, and creatives for causal inference. |

| Platform | Integration Requirements |
| --- | --- |
| Meta – Conversion Lift | Meta Pixel/App Events for conversion tracking. Requires a Facebook Ads Manager account with enough conversions (e.g. ~500+ events and ~$5K spend) to run a valid test. No deep integration needed; setup is within Ads Manager. |
| AppsFlyer – “Incrementality” | AppsFlyer SDK installed in app; use of AppsFlyer Audiences to segment users. Integration with supported ad networks (to enforce holdouts and receive impression data). For full functionality, networks should send impression and click data to AppsFlyer. |
| Adjust – InSight (AI Lift) | App must be integrated with Adjust (SDK) and feeding all campaign data. No special test setup; the AI analyzes existing performance data. Adjust’s Spend ingestion (SpendWorks) is recommended for accuracy. |
| Singular – (No dedicated name) | Singular SDK for tracking and attribution. The marketer must design experiments (e.g. pause campaigns in certain geos, create holdout audience lists). Singular’s Audience Management can help create/exclude segments on ad platforms. Data from experiments is pulled into Singular for analysis. |
| Appier's "Agentic Incrementality" | Requires post-back data from MMPs. With Appier's campaign data, it can measure incrementality of different creatives, inventories, and placements under the campaigns Appier manages. With other channels' campaign data, it provides a more holistic incrementality test across all channels. |

| Platform | Platform Scope (Channels/OS) |
| --- | --- |
| Meta – Conversion Lift | Covers Facebook, Instagram, and Audience Network ads on Android & iOS. Lift can be measured for app installs, in-app events, web conversions, etc., on Meta properties. The open-source GeoLift tool can measure cross-channel geo impact. |
| AppsFlyer – “Incrementality” | Multi-platform: Works across 60+ ad partners (Facebook, Google, Twitter, Snap, TikTok, ad networks) simultaneously. Applicable to Android & iOS apps (AppsFlyer attribution covers both). Commonly used for retargeting campaigns, but also supports UA and even owned channels like email/SMS. |
| Adjust – InSight (AI Lift) | Omnichannel: Any platform tracked by Adjust (mobile ad networks, self-attributing channels, web campaigns, SKAdNetwork data) on Android & iOS can be analyzed. Designed to handle ATT limitations via aggregated SKAN data modeling. |
| Singular – (No dedicated name) | Cross-platform & channel: Supports Android & iOS and any ad sources integrated with Singular. Can consolidate data for Facebook, Google, Apple Search Ads, SDK networks, and even offline campaigns. Essentially channel-agnostic; the method (geo, holdout, etc.) is chosen case by case. |
| Appier's "Agentic Incrementality" | Multi-platform, including mobile (Android & iOS) and web, as well as Google and Meta, provided the client can share campaign data from these channels. |

| Platform | Availability & Notes |
| --- | --- |
| Meta – Conversion Lift | Offered via Meta’s Experiments tool (Test & Learn). Generally available to advertisers meeting criteria (no additional cost). New Incremental Attribution feature (2025) uses ML to continuously optimize for incremental outcomes without manual experiments. |
| AppsFlyer – “Incrementality” | Available as a premium solution for AppsFlyer clients. Accessible via AppsFlyer dashboard (“Incrementality” section). Requires sufficient user base to split into groups. Provides real-time reporting and raw data export for experiments. |
| Adjust – InSight (AI Lift) | Included for Adjust clients (part of the “Adjust Recommend” suite). Generally available with no minimum spend, but best for apps with robust data history. Delivers on-demand incrementality results at a confidence level above 95%. Helps detect organic cannibalization and lift by channel without manual experiments. |
| Singular – (No dedicated name) | Available to all Singular customers (no separate module; uses core analytics tools). Singular’s team often provides guidance for incrementality tests. Relies on third-party tools or manual setup for the experiment itself (e.g. using the open-source GeoLift or partner services like INCRMNTAL alongside Singular data). Emphasizes best practices in content (e.g. Growth Masterminds podcasts) rather than an out-of-box test feature. |
| Appier's "Agentic Incrementality" | Available to all advertisers running app advertisements in RTB channels. Provided to both Appier's clients and non-clients. |

| Platform | Key Differentiator |
| --- | --- |
| Meta – Conversion Lift | "Incremental Attribution" as a real-time optimization goal, operationalizing causality. |
| AppsFlyer – “Incrementality” | Specialized focus on mobile remarketing with ITT vs. Reach-based calculations. |
| Adjust – InSight (AI Lift) | Avoids live holdouts and opportunity cost via its synthetic control model. |
| Singular – (No dedicated name) | Acts as the foundational data layer, enabling (but not performing) incrementality tests. |
| Appier's "Agentic Incrementality" | "Agentic Incrementality" serves as a real-time optimization objective without additional cost or implementation requirements. |

8. Choosing Your Measurement Approach: A Decision Framework

Marketers should select their incrementality methodology based on the strategic question they are trying to answer.

When the goal is to obtain definitive, scientifically rigorous proof of a major channel's value—for example, to justify a multi-million dollar annual budget to the C-suite—the Experimentalist approach offered by Google and Meta is the appropriate choice.

A well-designed RCT or geo-test provides the most defensible and unambiguous evidence of causal impact, making it ideal for foundational, strategic budget allocation decisions.
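Part of designing such a test well is sizing it before launch. The sketch below uses the standard normal-approximation formula for comparing two proportions to estimate how many users each group needs and how long the test might run. The function names, the 5% significance level, and the 80% power default are assumptions made for illustration, not requirements of any platform.

```python
import math
from statistics import NormalDist


def users_per_group(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate users needed per group to detect a given relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def estimated_duration_days(n_per_group, eligible_users_per_day):
    """Days needed to fill both groups at a given daily volume of eligible users."""
    return math.ceil(2 * n_per_group / eligible_users_per_day)


# Hypothetical scenario: 2% baseline conversion rate, aiming to detect a 10% relative lift.
n = users_per_group(baseline_rate=0.02, relative_lift=0.10)
print(n, estimated_duration_days(n, eligible_users_per_day=10_000))
```

Halving the lift to be detected roughly quadruples the required sample, which is one reason experiments on small effects can take weeks.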

For Continuous Performance Monitoring & Optimization

When the objective is to get rapid, directional feedback on the impact of day-to-day campaign management—such as budget shifts, creative swaps, or pausing a specific ad set—the Modeler approach from a platform like Adjust is more practical. Its "always-on," non-disruptive nature allows for agile decision-making without the cost and time investment of a live experiment.

For Mobile App Remarketing Specialists

For teams whose primary focus is optimizing the complex dynamics of re-engaging existing app users, a Specialist tool like AppsFlyer's Incrementality is highly valuable. Its purpose-built features, such as the distinction between ITT and Reach-based calculations, provide the nuanced insights required to master this specific marketing discipline.
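The difference between the two views can be illustrated with a small sketch. It is not AppsFlyer's calculation: the intent-to-treat (ITT) figure compares everyone assigned to each group, while the second figure rescales that effect to the users who were actually reached, under the assumption that assignment has no effect on users who never saw an ad. All names and numbers are hypothetical.

```python
def itt_and_reach_lift(test_users, test_reached, test_conversions,
                       control_users, control_conversions):
    """Compare an ITT estimate with a reach-adjusted estimate (illustrative only)."""
    test_rate = test_conversions / test_users
    control_rate = control_conversions / control_users

    # ITT: difference in conversion rate across everyone assigned to each group.
    itt_effect = test_rate - control_rate

    # Reach adjustment: attribute the whole ITT effect to users who were reached,
    # assuming unreached users behave as they would have in the control group.
    reach_rate = test_reached / test_users
    effect_on_reached = itt_effect / reach_rate

    return {
        "itt_effect": itt_effect,
        "incremental_conversions": itt_effect * test_users,
        "reach_rate": reach_rate,
        "effect_on_reached": effect_on_reached,
    }


# Hypothetical remarketing test: only 40% of the test group was actually served an ad.
print(itt_and_reach_lift(50_000, 20_000, 1_500, 50_000, 1_250))
```

When reach is low, the ITT effect looks small because it is diluted by unreached users; the reach-adjusted figure shows the effect per user who actually saw an ad.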

For All Sophisticated Marketers

Regardless of the chosen testing methodology, establishing a Data Foundation with a platform like Singular is a non-negotiable prerequisite. Ensuring that the data feeding any experiment or model is clean, complete, and unified is the only way to guarantee the reliability and accuracy of the resulting insights.
