
A Complete Guide to Breaking Free from Attribution Myths and Driving True Growth in Mobile User Acquisition

Table of Contents

- Introduction: Incrementality in Mobile UA

- The Core Problem: Incrementality ≠ Last-Click Attribution

- Why This Matters Now More Than Ever

- What You'll Learn in This Guide

- Why Incrementality Matters More Than Ever in Mobile UA

- Common Implementation Barriers (And How to Overcome Them)

- The 7 Myths Debunked – A Deep Dive

- Implementing Incrementality: Practical Guides and Case Studies

- Industry-Specific Implementation Strategies

- Tools and Platform Integration Guide

- Overcoming Objections and Advanced Topics

- Conclusion: Breaking Free from Myths to Drive True Growth

- FAQ: Answering Hundreds of Long-Tail Questions

Introduction: Incrementality in Mobile UA

In a privacy-first world, last-click often tells a flattering story that doesn’t match reality. Since iOS 14.5 introduced App Tracking Transparency (ATT) in April 2021, user-level tracking on iOS requires explicit opt-in, and SKAdNetwork reports aggregate outcomes with privacy thresholds. That makes path-based attribution noisy—and incrementality testing essential.

The Core Problem: Incrementality ≠ Last-Click Attribution

What we mean by incrementality: experiments (e.g., holdouts, geo-lift, or platform lift studies) that answer, “Would these users have converted without my ads?” Platforms like Meta and Google Ads offer built-in lift experiments that compare exposed vs. control groups to isolate causal impact.

Last-click attribution, meanwhile, is a simplistic model that credits the final touchpoint before conversion, completely ignoring multi-touch user journeys and baseline behaviors. It's like giving a basketball assist to only the last pass before a score, ignoring the entire play that made it possible.

Why This Matters Now More Than Ever

When identifiers are constrained, aggregate and privacy-preserving measurement fills the gap. On Android, the Privacy Sandbox (e.g., Attribution Reporting API) provides conversion measurement without cross-party identifiers, enabling lift designs that respect privacy. These approaches won’t rebuild old user-level paths—but they will show what’s truly incremental.

Teams that adopt routine lift testing (campaign- or geo-level) typically reallocate budget away from non-incremental segments and toward audiences/creatives that move the needle. The net effect is higher incremental ROAS, but the magnitude is brand- and market-specific—measure it for your app.

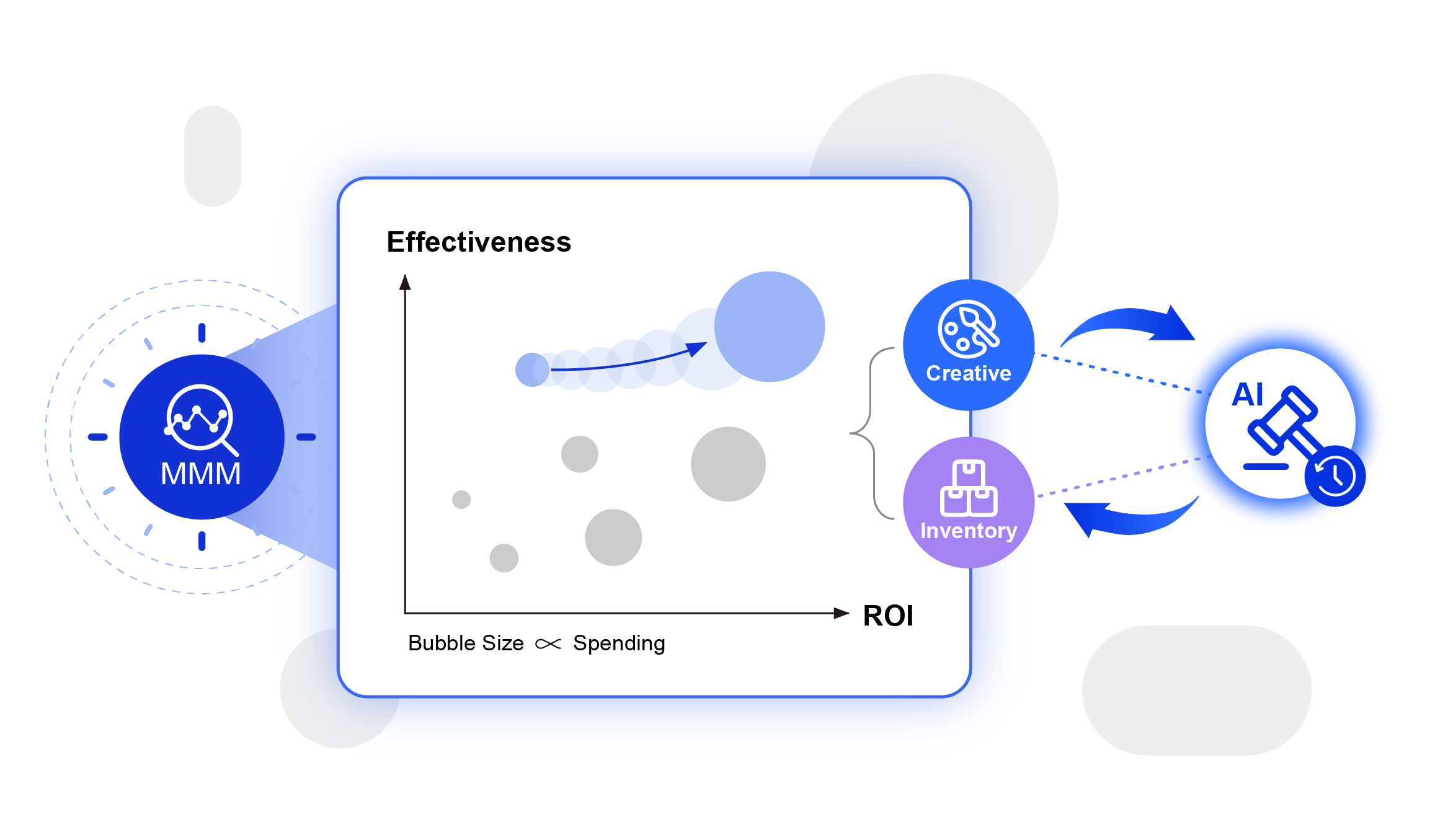

In response to the growing demand for sophisticated incrementality measurement, Appier has developed "Agentic Incrementality," a groundbreaking solution that delivers continuous, real-time incrementality testing. Powered by advanced Media Mix Modeling (MMM) methodology and our proprietary agentic AI technology, this platform enables marketers to optimize campaign incrementality dynamically rather than relying on periodic, static assessments.

This innovation represents a paradigm shift in performance validation, moving the industry toward truly adaptive campaign optimization based on live incrementality insights.

What You'll Learn in This Guide

This comprehensive resource debunks 7 critical myths that keep mobile UA teams trapped in last-click thinking, while providing practical frameworks for implementing incrementality measurement across gaming, e-commerce, and fintech apps. You'll discover:

- Why iOS 14+ made last-click attribution even less reliable

- How to run your first incrementality test on any budget

- Industry-specific implementation strategies for gaming, e-com, and fintech

- Tools and integrations that make incrementality measurement scalable

- Real frameworks for transitioning your team from last-click to incremental thinking

Why Incrementality Matters More Than Ever in Mobile UA

The Post-ATT Reality: When Last-Click Broke Down

Apple's App Tracking Transparency (ATT) didn't just change mobile marketing; it exposed how fragile last-click attribution always was. With iOS 14+ limiting data visibility, that final touchpoint you're crediting might represent only a small share of the actual user journey.

The SKAdNetwork Challenge

Apple's privacy-focused framework provides aggregated campaign data but strips away the user-level insights that made last-click feel reliable. This forces a fundamental question: If you can't track individual user paths, how do you measure true marketing impact?

Android vs. iOS Incrementality

While Android still offers more granular tracking, Google's Privacy Sandbox is pushing the ecosystem toward aggregated measurement models. Forward-thinking growth teams are already building incrementality frameworks that work across both platforms.

Common Implementation Barriers (And How to Overcome Them)

"We Don't Have the Budget for Expensive Tests"

Modern incrementality testing doesn't require massive budgets. Geo-lift experiments can run for as little as $5,000, while automated A/B testing tools offer freemium options for smaller teams.

"Our Data Infrastructure Isn't Ready"

Start simple. Even basic holdout tests using existing campaign management tools can provide incrementality insights. The key is beginning the journey, not having perfect measurement from day one.

"Leadership Won't Approve Breaking What Works"

Frame it as optimization, not replacement. Run incrementality tests alongside existing attribution models to demonstrate the difference, then gradually shift budget allocation based on incremental insights.

The 7 Myths Debunked – A Deep Dive

- Myth 1: Last-Click Attribution Accurately Measures Incrementality

- Myth 2: Incrementality Tests Are Too Expensive and Time-Consuming for Mobile UA

- Myth 3: Incrementality Only Applies to Paid Media, Not Organic or Lifecycle UA

- Myth 4: High ROAS from Last-Click Means Your UA is Truly Incremental

- Myth 5: Incrementality is Irrelevant in a Cookieless World

- Myth 6: All Attribution Models Are Equally Good for Incrementality

- Myth 7: Incrementality Insights Don't Scale Across Global UA Campaigns

Myth 1: Last-Click Attribution Accurately Measures Incrementality

The Myth: "If our last-click ROAS looks good, our campaigns are driving incremental growth."

Why It's Pervasive: Last-click feels intuitive. It's the simplest story to tell stakeholders and the easiest data to access. Most attribution platforms default to last-click reporting, making it the path of least resistance.

The Reality: Last-click attribution fundamentally ignores baseline user behavior and cannibalization effects. It credits your ads for users who were already on a path to convert, inflating your perceived impact while masking where your budget actually drives incremental growth.

Key Questions Answered:

1. What are the specific limitations of last-click in multi-channel UA?

Last-click creates a "winner takes all" scenario that doesn't reflect how users actually discover and engage with apps. A user might see your display ad, search for your app, then click a paid search ad before converting. Last-click gives 100% credit to paid search, zero to display—even if display was the true catalyst.

2. How can you spot over-attribution in Facebook Ads for mobile games?

Watch for these red flags: suspiciously high view-through conversion rates, ROAS that seems too good compared to industry benchmarks, or organic installs that rise when you pause campaigns while total installs barely move. These often indicate you're getting credit for users who would have found your game anyway.

3. Why does last-click overestimate organic lift in e-commerce apps?

E-commerce apps often see high "direct" traffic that gets attributed to the last paid touchpoint. Users who bookmark your app, have it on their home screen, or return through email marketing get credited to whatever paid campaign they last interacted with—sometimes days or weeks prior.

4. Can last-click work with probabilistic modeling?

While probabilistic models can help fill data gaps, they're still built on the flawed assumption that the last touchpoint deserves full credit. You're essentially making better guesses about a fundamentally flawed attribution model.

5. How do you audit last-click data for incrementality gaps in fintech user flows?

Compare your attribution data with organic conversion patterns during campaign pause periods. Look for discrepancies in geographic regions, user demographics, or conversion timing that suggest your paid campaigns are getting credit for organic behavior.

Actionable Takeaway:

Add a weekly baseline check (brief campaign pauses or holdout geos) to separate organic from paid outcomes. Then re-compute “true ROAS” as incremental revenue / spend.
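As a minimal sketch of that "true ROAS" arithmetic, assuming a holdout design in which the control group's revenue is scaled up to the size of the exposed group, the calculation might look like this. The function name and all figures are hypothetical and chosen only for illustration.

```python
def incremental_roas(test_revenue, control_revenue, test_users, control_users, spend):
    """Incremental revenue / spend, with control revenue scaled to the test group's size."""
    if control_users <= 0 or spend <= 0:
        raise ValueError("control_users and spend must be positive")
    # Revenue the exposed group would be expected to generate with no ads (the baseline).
    baseline_revenue = control_revenue * (test_users / control_users)
    incremental_revenue = test_revenue - baseline_revenue
    return incremental_revenue / spend


# Hypothetical week: 90,000 exposed users, 10,000 held out, $20,000 spend.
roas = incremental_roas(
    test_revenue=120_000,
    control_revenue=10_000,
    test_users=90_000,
    control_users=10_000,
    spend=20_000,
)
print(f"incremental ROAS: {roas:.2f}")  # prints "incremental ROAS: 1.50"
```

In this made-up example the scaled baseline is $90,000, so only $30,000 of the $120,000 is incremental, and a campaign that looks like 6x on attributed revenue comes out at 1.5x.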

Myth 2: Incrementality Tests Are Too Expensive and Time-Consuming for Mobile UA

The Myth: "Only big companies with massive budgets can afford incrementality testing."

Why It's Pervasive: Early incrementality solutions required significant data science resources and large holdout groups that seemed to sacrifice short-term performance for long-term insights.

The Reality: Incrementality tests don’t have to be expensive. Start with a simple holdout on one campaign for 2–3 weeks; platforms like Meta and Google Ads can help automate design/analysis for eligible accounts. Budget and duration depend on your volume; your MDE (minimum detectable effect) will determine the sample sizes.
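To make the link between MDE and sample size concrete, here is a rough sketch using the standard two-proportion power formula. It assumes equal-sized test and control groups, a two-sided test, and a conversion-rate metric. The function name and the example rates are illustrative assumptions, and a 90/10 split would need an adjusted formula.

```python
import math
from statistics import NormalDist


def users_per_group(baseline_rate, relative_mde, alpha=0.05, power=0.8):
    """Approximate users needed in EACH group to detect a relative lift of `relative_mde`."""
    if not 0 < baseline_rate < 1 or relative_mde <= 0:
        raise ValueError("baseline_rate must be in (0, 1) and relative_mde positive")
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)  # conversion rate if the lift is real
    if p2 >= 1:
        raise ValueError("baseline_rate * (1 + relative_mde) must stay below 1")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical app with a 2% baseline conversion rate.
for mde in (0.05, 0.10, 0.20):
    print(f"relative MDE {mde:.0%}: {users_per_group(0.02, mde):,} users per group")
```

The required sample grows roughly with the inverse square of the MDE, which is why low-volume apps usually need either longer tests or a larger effect to aim for.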

Key Questions Answered:

1. How much does incrementality testing actually cost for a mid-sized gaming app?

A comprehensive geo-lift test for a gaming app spending $50K/month on UA typically costs 5-10% of monthly spend ($2,500-$5,000) and runs for 2-3 weeks. The ROI usually pays back within the first month of optimized spending.

2. What are quick-win incrementality methods for e-commerce UA managers?

Start with holdout testing on your highest-spending campaigns. Allocate 10% of users to a control group that sees no ads for 2 weeks. Compare conversion rates between test and control groups to measure true incremental lift.

3. How do you run geo-lift tests on a $10,000 budget?

Focus on 3-5 similar geographic markets. Run campaigns in 2-3 test markets while using 1-2 similar markets as controls. Track the lift in target metrics (installs, purchases, etc.) between test and control regions. This approach works even with limited budgets.

4. What free tools can simulate incrementality for Android apps?

Google Ads offers built-in lift studies for qualified advertisers. Facebook provides brand lift studies that can approximate incrementality. Firebase A/B Testing can run holdout experiments at no additional cost beyond your existing media spend.

5. Does AppsFlyer support low-cost incrementality experiments?

AppsFlyer's Incrementality Suite offers automated testing that integrates with existing campaigns. While not free, it eliminates the need for custom data science resources and provides results within their standard attribution reporting.

6. Is there an incrementality test that doesn't require a holdout group? I don't want to withhold ads from audiences who are likely to convert.

Absolutely. Appier's Agentic Incrementality offers a breakthrough solution that eliminates the need for traditional holdout groups or campaign pauses. This always-on, real-time incrementality measurement runs continuously alongside your active campaigns, ensuring you never miss conversion opportunities by withholding ads from high-value audiences.

Unlike conventional testing methods that require splitting audiences into control and test groups, our approach uses advanced Media Mix Modeling to measure incrementality without sacrificing potential conversions. The system provides comprehensive incrementality results for any specified campaign period while simultaneously optimizing performance in real-time, delivering both measurement insights and campaign improvements without the traditional trade-offs of holdout testing.

Cost-Benefit Analysis Framework:

| Test Type | Setup Cost | Duration | Best For |
| --- | --- | --- | --- |
| Holdout Test | $0-1,000 | 2-3 weeks | Any budget size |
| Geo-Lift | $2,500-10,000 | 3-4 weeks | Regional campaigns |
| Ghost Bidding | $5,000-15,000 | 1-2 weeks | Paid search/social |
| Synthetic Control | $1,000-5,000 | 4-6 weeks | Complex attribution |

Actionable Takeaway:

Your first incrementality test should be a simple holdout experiment. Pick your highest-spending campaign, hold out 10% of your target audience for two weeks, and measure the difference. The insights will pay for themselves immediately.
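One way to hold out a stable 10% of an audience is to hash each user ID into a bucket, so the same user always lands in the same group for the life of the test. The sketch below assumes you control audience lists yourself, for example through exclusion lists. The function name, experiment label, and ID format are hypothetical.

```python
import hashlib


def assign_group(user_id: str, experiment: str, holdout_share: float = 0.10) -> str:
    """Deterministically assign a user to 'holdout' or 'exposed' for one experiment."""
    # Salting with the experiment name gives each test an independent split.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # roughly uniform value in [0, 1)
    return "holdout" if bucket < holdout_share else "exposed"


# Hypothetical audience of 100,000 user IDs.
groups = [assign_group(f"user-{i}", "holdout-test-1") for i in range(100_000)]
print(f"holdout share: {groups.count('holdout') / len(groups):.3f}")
```

Because the assignment depends only on the ID and the experiment name, it can be recomputed anywhere in the pipeline without storing a lookup table.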

Myth 3: Incrementality Only Applies to Paid Media, Not Organic or Lifecycle UA

The Myth: "Incrementality testing is just for paid ads—organic traffic and lifecycle campaigns don't need this measurement."

Why It's Pervasive: Most incrementality discussions focus on paid media attribution problems, making it seem like organic channels and lifecycle campaigns operate in different measurement universes.

The Reality: Incrementality measurement is even more critical for organic and lifecycle campaigns because these channels often cannibalize each other and create complex interaction effects that traditional analytics can't capture.

Key Questions Answered:

1. How do you apply incrementality to organic search in mobile apps?

Run ASO (App Store Optimization) experiments where you test different app store elements (icons, descriptions, screenshots) across different regions while holding others constant. Measure the lift in organic installs between test and control markets to determine true ASO impact.

2. What's the incremental value of push notifications in gaming user retention?

Create randomized control groups within your user base—some receive your push campaign, others don't. Track retention, session frequency, and in-app purchase behavior between groups. Many teams discover their push campaigns have neutral or even negative incremental effects.

3. How does incrementality differ for UA vs. re-engagement campaigns?

UA incrementality asks "Would they have installed without ads?" Re-engagement incrementality asks "Would they have returned without this campaign?" The baseline behavior is different—dormant users have lower organic return rates, making re-engagement campaigns typically more incremental than UA campaigns targeting cold audiences.

4. Can you measure incremental lift from app store optimization (ASO)?

Yes, through geographic or temporal testing. Run different app store creatives in different regions, or test seasonal changes against historical baselines. The key is creating controlled conditions where you can isolate ASO impact from other marketing activities.

5. How do email and push notifications interact in incrementality testing?

Create a 2x2 test matrix: Group 1 gets email only, Group 2 gets push only, Group 3 gets both, Group 4 gets neither. This reveals not just individual channel incrementality but also whether combining channels creates synergy or cannibalization.
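The 2x2 matrix above can be read with a few subtractions. This sketch assumes each group's outcome is a simple rate, such as 7-day return rate. The function name and the example rates are hypothetical.

```python
def factorial_effects(rate_neither, rate_email, rate_push, rate_both):
    """Lift of each channel over the no-message group, plus the interaction term."""
    email_lift = rate_email - rate_neither
    push_lift = rate_push - rate_neither
    combined_lift = rate_both - rate_neither
    # Positive interaction suggests synergy; negative suggests cannibalization.
    interaction = combined_lift - (email_lift + push_lift)
    return {
        "email_lift": email_lift,
        "push_lift": push_lift,
        "combined_lift": combined_lift,
        "interaction": interaction,
    }


# Hypothetical 7-day return rates for the four groups.
effects = factorial_effects(rate_neither=0.10, rate_email=0.12, rate_push=0.13, rate_both=0.14)
for name, value in effects.items():
    print(f"{name}: {value:+.3f}")
```

With these made-up rates, email adds 2 points and push adds 3 points on their own, but together they add only 4, so the interaction is negative by 1 point: the channels partly cannibalize each other. Each difference still needs a significance check before acting on it.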

Cross-Channel Incrementality Framework:

Organic Search + Paid Search:

Users searching for your brand name organically may click on paid ads instead, creating cannibalization. The traditional testing approach involves pausing brand campaigns in specific regions and measuring the resulting organic install lift. Appier offers an alternative with its always-on, real-time incrementality testing that eliminates the need to pause campaigns.

Lifecycle Email + Push:

High-frequency communication can create user fatigue. Test different communication cadences and channel combinations to find the optimal incremental approach.

Referral Programs + Paid UA:

Referred users might also see your paid ads, creating double-counting. Use unique referral codes and control groups to measure true incremental lift from referral campaigns.

Actionable Takeaway:

Map your customer journey touchpoints and identify the 2-3 areas where organic and paid channels most likely overlap. Start incrementality testing there—you'll often find surprising cannibalization effects that are costing you efficiency.

Myth 4: High ROAS from Last-Click Means Your UA is Truly Incremental

The Myth: "Our campaigns show 5x ROAS in last-click attribution, so they must be driving significant incremental value."

Why It's Pervasive: ROAS is the universal language of performance marketing. When stakeholders see high ROAS numbers, it creates confidence that campaigns are working effectively.

The Reality: High last-click ROAS often masks non-incremental traffic. You might be paying for users who would have converted anyway, while truly incremental campaigns show lower ROAS because they're reaching harder-to-convert audiences.

Key Questions Answered:

1. Why does last-click ROAS mislead in competitive bidding for e-commerce apps?

In competitive auction environments, algorithms optimize for users most likely to convert—often those already considering your product. This creates artificially high ROAS by targeting users with high organic conversion intent, not users who need ads to push them toward conversion.

2. How do you reconcile last-click data with incrementality reports?

Create a "true ROAS" calculation: (Incremental Revenue / Ad Spend). Compare this with your last-click ROAS. The difference represents how much you're overpaying for non-incremental traffic. Many teams discover their "5x ROAS" campaigns actually deliver 2-3x incremental ROAS.

3. What if your UA dashboard shows 5x ROAS but tests reveal zero incrementality?

This happens when you're targeting audiences with very high organic conversion intent—people who were already going to purchase. Your ads aren't changing behavior; they're just getting credit for inevitable conversions. Shift budget toward broader, colder audiences with lower last-click ROAS but higher incrementality.

4. How do you identify non-incremental traffic in high-ROAS campaigns?

Look for these patterns: very short time-to-conversion after ad click, high correlation between organic and paid traffic patterns, unusually high conversion rates compared to industry benchmarks, and total conversions that barely change when campaigns are paused because organic traffic absorbs the difference.

Case Study: Fintech App's ROAS Reality Check

A fintech app was celebrating 6x ROAS on their retargeting campaigns until incrementality testing revealed the truth:

Last-Click View: 6x ROAS, 2,000 monthly conversions

Incrementality Reality: 2.1x incremental ROAS, 350 truly incremental conversions

The app was spending $50,000 monthly to get credit for 1,650 users who would have converted anyway. By shifting 70% of that budget to lookalike audiences (lower last-click ROAS but higher incrementality), they maintained total conversion volume while reducing spend by 30%.
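The case study's figures can be restated as cost per conversion. This sketch uses only the numbers quoted above and assumes the $50,000 is the monthly spend on the retargeting campaigns in question.

```python
spend = 50_000       # monthly spend quoted in the case study
attributed = 2_000   # conversions credited by last-click
incremental = 350    # conversions the test found to be truly incremental

non_incremental = attributed - incremental
non_incremental_share = non_incremental / attributed
cost_per_attributed = spend / attributed
cost_per_incremental = spend / incremental

print(f"non-incremental conversions: {non_incremental} ({non_incremental_share:.1%})")
print(f"cost per attributed conversion: ${cost_per_attributed:.2f}")
print(f"cost per incremental conversion: ${cost_per_incremental:.2f}")
```

Seen this way, 82.5% of the credited conversions were not caused by the ads, and the real cost of each incremental conversion is about $142.86 rather than the $25 that last-click reporting implies.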

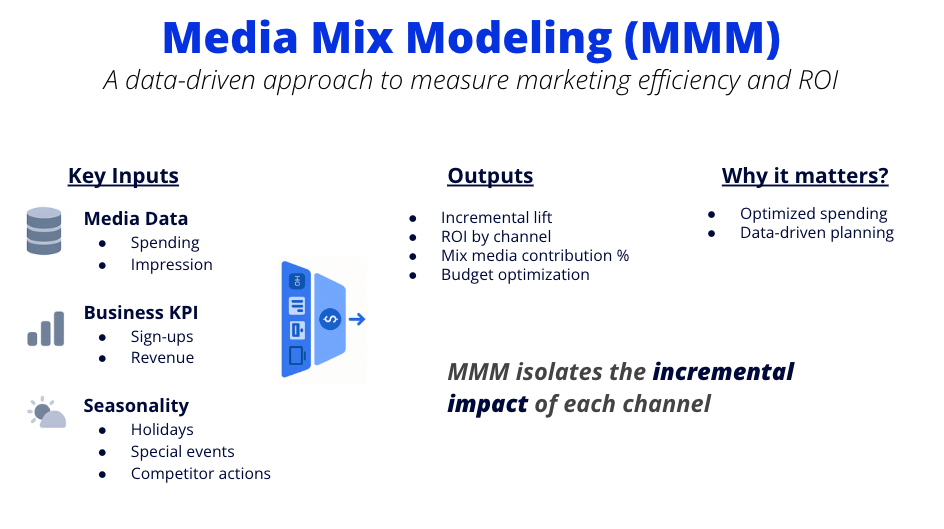

Media Mix Modeling (MMM) Integration:

How MMM Complements Incrementality:

- MMM provides top-down view of channel incrementality over time

- Incrementality testing provides bottom-up, campaign-specific insights

- Together, they create a complete picture of what's truly driving growth

When MMM and Incrementality Disagree:

- MMM might show TV driving mobile app installs, but incrementality tests show specific mobile campaigns are non-incremental

- This often indicate

Actionable Takeaway:

For every campaign with ROAS above 4x, run a 2-week holdout test with 15% of your audience. High-ROAS campaigns are the most likely to hide non-incremental traffic, and the budget savings from optimization usually exceed the short-term performance dip.

Myth 5: Incrementality is Irrelevant in a Cookieless World

The Myth: "Privacy changes make incrementality measurement impossible, so we might as well stick with available attribution data."

Why It's Pervasive: Privacy regulations and platform changes have limited data availability, making incrementality testing seem even more complex when basic attribution already feels broken.

The Reality: Privacy changes make incrementality more important. SKAdNetwork (iOS) and Attribution Reporting (Android/Chrome) are designed to protect user privacy via aggregate reporting, which is compatible with geo- or time-based lift studies and platform-run conversion lift. [2,4–6,12]

MMM (top-down) and lift tests (bottom-up) work together: MMM explains channel contributions over time; lift tests validate causal impact at the campaign/audience level. Google’s open-source MMM, Meridian, became generally available on January 29, 2025 and supports Bayesian causal modeling and geo-level calibration.

Key Questions Answered:

1. How do you measure incrementality without IDFA in iOS UA?

Use aggregated testing methods: geographic lift studies, time-based holdouts, and SKAdNetwork's conversion value mapping. Focus on cohort-level analysis rather than user-level tracking. Many teams find these approaches more reliable than IDFA-dependent attribution ever was.

2. What's the future of incrementality in Google's Privacy Sandbox?

Within the Privacy Sandbox, the Attribution Reporting API is the piece designed for conversion measurement, while the Topics API serves interest-based ad selection rather than measurement. Attribution Reporting's aggregated, noised reports protect user privacy while still enabling campaign-level lift measurement.

3. For gaming apps, how does server-side attribution enable incrementality?

Server-side attribution captures conversion events that happen within your app environment, regardless of client-side privacy restrictions. This creates more complete funnel data for incrementality testing, especially for in-app purchase and retention metrics.

4. How do you handle incrementality in web-to-app conversions post-cookies?

Use probabilistic matching combined with first-party data. Create unique promotional codes or landing pages for different campaign audiences, then track app install and conversion patterns. The key is building attribution based on campaign exposure, not individual user tracking.

Privacy-Safe Incrementality Methods:

Differential Privacy Experiments

Add statistical noise to individual conversion events while maintaining accurate aggregate insights. This approach works within Apple's Private Click Measurement and Google's Privacy Sandbox frameworks.
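As a rough illustration of why aggregate insights can survive added noise, the sketch below applies the Laplace mechanism to an aggregate conversion count. This is a simpler variant than perturbing individual events, and it is not the mechanism used by any specific platform. The function name and parameter values are assumptions.

```python
import random


def noisy_count(true_count, epsilon, sensitivity=1.0, rng=random):
    """Return the count plus Laplace noise with scale sensitivity / epsilon."""
    if epsilon <= 0 or sensitivity <= 0:
        raise ValueError("epsilon and sensitivity must be positive")
    rate = epsilon / sensitivity
    # The difference of two independent exponentials is Laplace-distributed.
    noise = rng.expovariate(rate) - rng.expovariate(rate)
    return true_count + noise


# Hypothetical campaign-level totals: the noise is small relative to the counts.
rng = random.Random(7)
for label, count in (("test geo conversions", 2_700), ("control geo conversions", 250)):
    print(f"{label}: true={count}, reported={noisy_count(count, epsilon=1.0, rng=rng):.1f}")
```

With noise on the order of a few conversions, a lift computed from totals in the thousands changes very little, while any single user's contribution is masked. Smaller cohorts are affected proportionally more.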

Synthetic Control Groups

Use machine learning to create artificial control groups based on user behavior patterns rather than individual user identifiers. This method provides incrementality insights while respecting user privacy.

Geo-Temporal Testing

Combine geographic and temporal controls to isolate campaign effects. Run campaigns in specific regions during specific time periods, using similar regions and time periods as controls.
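A simple way to combine the two controls is to project what the test regions would have done without the campaign, using the control regions' change over the same period. The sketch below assumes test and control regions would have followed the same trend otherwise. The function name and daily install figures are hypothetical.

```python
def geo_temporal_lift(test_pre, test_post, control_pre, control_post):
    """Estimate incremental volume in test regions using control-region growth."""
    if control_pre <= 0 or test_pre <= 0:
        raise ValueError("pre-period values must be positive")
    # Counterfactual: test regions grow at the same rate as control regions.
    counterfactual = test_pre * control_post / control_pre
    incremental = test_post - counterfactual
    return {
        "counterfactual": counterfactual,
        "incremental": incremental,
        "relative_lift": incremental / counterfactual,
    }


# Hypothetical average daily installs before and during the campaign flight.
result = geo_temporal_lift(test_pre=1_000, test_post=1_300, control_pre=800, control_post=880)
print(result)
```

Here the control regions grew 10% on their own, so only 200 of the 300 extra daily installs in the test regions are credited to the campaign, a relative lift of about 18%.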

Platform-Specific Approaches:

iOS Post-ATT:

- SKAdNetwork conversion values for incrementality tracking

- Geo-lift studies using campaign flight timing

- Cohort analysis of app store performance metrics

Android Privacy Sandbox:

- Attribution Reporting API for aggregated incrementality

- Topics API for privacy-safe audience testing

- Private State Tokens (formerly Trust Tokens, a Chrome web API) for anti-fraud signals that help keep test traffic clean

Actionable Takeaway:

Stop waiting for privacy-safe attribution to get "back to normal." Start building incrementality measurement that works within privacy constraints now—it's more reliable than cookie-dependent attribution ever was, and it's the foundation for sustainable growth in a privacy-first future.

Myth 6: All Attribution Models Are Equally Good for Incrementality

The Myth: "It doesn't matter which attribution model we use—first-click, last-click, or multi-touch all give us the same incrementality insights."

Why It's Pervasive: Attribution model selection often feels like an academic exercise rather than a business-critical decision. Many teams stick with platform defaults without understanding how model choice affects incrementality measurement.

The Reality: Attribution models create vastly different pictures of campaign incrementality. Data-driven attribution and time-decay models typically align more closely with true incrementality than first-click or last-click, but even sophisticated models can miss incrementality entirely.

Key Questions Answered:

1. Which attribution model best aligns with incrementality for fintech apps?

Data-driven attribution typically performs best for fintech because it considers the typical long consideration periods and multiple touchpoints in financial decision-making. However, it should be validated against holdout tests since it can still credit non-incremental touchpoints.

2. How do you transition from last-click to multi-touch in mobile UA?

Implement dual tracking: continue reporting last-click for consistency while building multi-touch measurement capabilities. Run parallel campaigns using both models to understand the difference, then gradually shift budget allocation based on multi-touch insights validated through incrementality testing.

3. What's the difference between Shapley value attribution and incrementality?

Shapley value attribution distributes conversion credit based on each touchpoint's marginal contribution to the outcome. While more sophisticated than last-click, it still doesn't answer whether the conversion would have happened without marketing. Incrementality testing measures whether marketing changed user behavior at all.

4. Do MMPs like Adjust or AppsFlyer offer incrementality-focused models?

Mobile Measurement Partners (MMPs) built their foundations on last-touch attribution models, primarily tracking mobile app installs and post-install events.

As the mobile marketing landscape has evolved toward causal measurement and privacy-first approaches, leading MMPs have recognized the limitations of traditional attribution and pivoted to develop sophisticated incrementality solutions.

These platforms now offer distinct methodologies that reflect fundamentally different philosophies about measuring true marketing impact beyond correlation-based metrics. Each MMP has crafted unique approaches to incrementality testing, from controlled experiment designs to statistical modeling techniques, creating a diverse ecosystem of measurement options for marketers.

This comprehensive analysis examines the incrementality testing methodologies employed by major MMPs alongside the established approaches from Google and Meta, providing marketers with the insights needed to select the most appropriate measurement framework for their specific needs.
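To make the Shapley discussion in question 3 above concrete, here is a small sketch that computes Shapley values by averaging each channel's marginal contribution over every ordering. The channel names and conversion counts are hypothetical. Note that the no-marketing baseline (the empty set) is supplied as an input, which in practice only a holdout or similar experiment can provide.

```python
from itertools import permutations


def shapley_values(channels, coalition_value):
    """Average marginal contribution of each channel over all orderings."""
    totals = {channel: 0.0 for channel in channels}
    orderings = list(permutations(channels))
    for order in orderings:
        seen = frozenset()
        for channel in order:
            with_channel = seen | {channel}
            totals[channel] += coalition_value[with_channel] - coalition_value[seen]
            seen = with_channel
    return {channel: total / len(orderings) for channel, total in totals.items()}


# Hypothetical conversions observed for each combination of active channels.
value = {
    frozenset(): 100,                      # baseline with no marketing
    frozenset({"search"}): 130,
    frozenset({"social"}): 150,
    frozenset({"search", "social"}): 170,
}
print(shapley_values(["search", "social"], value))
```

The credits (25 for search, 45 for social) sum to the 70 conversions above baseline. Without a measured baseline, the same method would spread all 170 conversions across the channels, which is the gap incrementality testing fills.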

Attribution Model Comparison Framework:

| Model Type | Incrementality Alignment | Best Use Case | Limitations |
| --- | --- | --- | --- |
| Last-Click | Poor | Direct response | Ignores journey complexity |
| First-Click | Poor | Brand awareness | Overvalues discovery |
| Linear | Moderate | Long consideration | Equal weight assumption |
| Time-Decay | Good | Short cycles | May undervalue discovery |
| Position-Based | Good | Balanced approach | Arbitrary weight distribution |
| Data-Driven | Best | Sufficient data | Still attribution, not incrementality |

Advanced Attribution Considerations:

Cross-Device Attribution: Users often discover apps on one device and install on another. Cross-device models provide better user journey understanding but still don't measure whether marketing changed behavior.

View-Through Attribution: Impression-based attribution can capture upper-funnel influence but often inflates incrementality by crediting ads that users never consciously noticed.

Post-Install Attribution: In-app event attribution becomes critical for measuring lifetime value incrementality, not just install incrementality.

Actionable Takeaway:

Audit your current attribution model by running the same campaigns through different attribution lenses. The differences in reported performance often reveal which touchpoints are getting undeserved credit. Use this analysis to inform your incrementality testing strategy.
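A quick way to see how much the lens matters is to score one journey under several rule-based models. The sketch below is illustrative only: the journey, the channel names, and the 7-day half-life are assumptions, and real platforms implement these models with their own details.

```python
def attribute(journey, model, half_life_days=7.0):
    """Split one conversion's credit across touchpoints under a given model.

    journey: list of (channel, days_before_conversion), oldest touch first.
    """
    if not journey:
        raise ValueError("journey must contain at least one touchpoint")
    n = len(journey)
    if model == "last_click":
        weights = [0.0] * (n - 1) + [1.0]
    elif model == "first_click":
        weights = [1.0] + [0.0] * (n - 1)
    elif model == "linear":
        weights = [1.0] * n
    elif model == "time_decay":
        # Credit halves for every `half_life_days` between touch and conversion.
        weights = [0.5 ** (days / half_life_days) for _, days in journey]
    else:
        raise ValueError(f"unknown model: {model}")
    total = sum(weights)
    credit = {}
    for (channel, _), weight in zip(journey, weights):
        credit[channel] = credit.get(channel, 0.0) + weight / total
    return credit


# Hypothetical journey: display 14 days out, paid social 7 days out, paid search same day.
journey = [("display", 14), ("paid_social", 7), ("paid_search", 0)]
for model in ("last_click", "first_click", "linear", "time_decay"):
    print(model, {ch: round(c, 3) for ch, c in attribute(journey, model).items()})
```

Paid search receives anywhere from 0% to 100% of the credit depending on the model, and none of the four answers says whether the conversion would have happened without the ads.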

Myth 7: Incrementality Insights Don't Scale Across Global UA Campaigns

The Myth: "Incrementality testing works for local campaigns, but global campaigns are too complex for meaningful incrementality measurement."

Why It's Pervasive: Global campaigns involve multiple cultures, currencies, competitive landscapes, and user behaviors. It seems impossible to create controlled experiments across such diverse conditions.

The Reality: Global incrementality measurement is not only possible but essential for efficient international growth. Machine learning models and regional holdout strategies can provide scalable incrementality insights that account for local market differences.

Key Questions Answered:

1. How do you apply incrementality to international UA in e-commerce?

Create regional control groups within each major market. Run incrementality tests in 2-3 countries per region while using similar countries as controls. Use local currency and purchasing power adjustments to normalize results across markets.

2. What challenges arise in scaling incrementality for gaming apps in APAC vs. North America?

APAC markets often have higher organic discovery rates through app store features and social sharing, making baseline conversion rates higher. North American markets typically have more competitive paid media environments. Adjust your incrementality baselines by region and validate with local holdout tests.

3. How do you adjust for cultural differences in incrementality baselines?

Map local user behaviors: social sharing patterns, app discovery preferences, payment methods, and seasonal shopping cycles. Build region-specific incrementality models rather than applying global averages. What's incremental in Germany might be baseline behavior in South Korea.

Global Incrementality Framework:

Phase 1: Regional Mapping

1. Identify baseline organic behavior by region

2. Map competitive intensity and user acquisition costs

3. Understand local user journey differences

Phase 2: Tiered Testing

Tier 1: Major markets with full incrementality testing

Tier 2: Secondary markets with simplified holdout tests

Tier 3: Emerging markets with campaign pause analysis

Phase 3: Machine Learning Scaling

1. Use successful tests from similar markets to predict incrementality

2. Build algorithmic optimization based on regional incrementality patterns

3. Automate budget allocation using incremental ROAS by geography
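As a deliberately naive sketch of the allocation step in Phase 3, the function below splits a budget in proportion to each geography's measured incremental ROAS and drops markets below a floor. The rule, the floor, and the figures are assumptions for illustration. A production allocator would also account for diminishing returns and uncertainty in each estimate.

```python
def allocate_budget(total_budget, iroas_by_geo, min_iroas=1.0):
    """Split budget proportionally to incremental ROAS among geos at or above the floor."""
    eligible = {geo: r for geo, r in iroas_by_geo.items() if r >= min_iroas}
    if not eligible:
        return {geo: 0.0 for geo in iroas_by_geo}
    total_iroas = sum(eligible.values())
    return {
        geo: total_budget * eligible.get(geo, 0.0) / total_iroas
        for geo in iroas_by_geo
    }


# Hypothetical incremental ROAS measured by regional lift tests.
plan = allocate_budget(30_000, {"US": 2.0, "DE": 1.0, "KR": 0.5})
print(plan)
```

With these made-up inputs the US receives $20,000, Germany $10,000, and South Korea nothing until a new test shows its incremental ROAS clearing the floor.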

Cultural Considerations by Region:

1. Europe: GDPR compliance affects data collection; higher privacy awareness impacts baseline attribution

2. APAC: Higher mobile-first usage, different social platform preferences, varying app store dynamics

3. Americas: More mature digital advertising, higher baseline ad awareness, different seasonal patterns

4. MEA: Emerging smartphone adoption, different payment preferences, varying internet connectivity

Actionable Takeaway:

Start global incrementality measurement with your top 3 revenue-generating countries. Run identical test structures in each market to understand regional differences, then use those insights to optimize your global budget allocation. The investment in regional understanding pays dividends in campaign efficiency.

Implementing Incrementality: Practical Guides and Case Studies

Step-by-Step Framework: Your First Incrementality Test

The 10-Step Mobile UA Incrementality Blueprint

Step 1: Baseline Measurement (Week -2)

Document your current organic conversion rates, seasonal patterns, and campaign performance across all channels. This baseline is crucial for measuring incremental lift accurately.

Step 2: Campaign Selection (Week -1)

Choose campaigns representing 10-15% of your total spend for testing. Avoid your absolute best or worst performers—pick campaigns with consistent, middle-tier performance for cleaner results.

Step 3: Control Group Design (Week -1)

Create matched control groups using either geographic regions, user cohorts, or random assignment. Ensure test and control groups have similar historical performance patterns.

Step 4: Measurement Setup (Week 0)

Implement tracking for both test and control groups. Set up automated reporting to capture daily metrics throughout the test period.

Step 5: Test Launch (Week 0)

Launch campaigns in test groups while completely suppressing ads to control groups. Maintain normal campaign optimization within test groups.

Step 6: Daily Monitoring (Weeks 0-2)

Track performance daily but avoid making optimization decisions until the test concludes. Document any external factors that might affect results.

Step 7: Statistical Analysis (Week 3)

Calculate incremental lift: (Test Group Performance - Control Group Performance) / Control Group Performance. Ensure statistical significance before drawing conclusions.
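The lift formula and the significance check can be sketched together. The example below assumes a conversion-rate metric and uses a two-proportion z-test with a normal approximation, which is one reasonable choice among several. The function name `lift_report` and the sample counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def lift_report(test_conv, test_n, ctrl_conv, ctrl_n, confidence=0.95):
    """Relative lift plus a two-proportion z-test (normal approximation)."""
    if ctrl_conv <= 0 or test_n <= 0 or ctrl_n <= 0:
        raise ValueError("need positive group sizes and control conversions")
    p_test = test_conv / test_n
    p_ctrl = ctrl_conv / ctrl_n
    diff = p_test - p_ctrl

    # Lift as defined in Step 7: (test - control) / control.
    lift = diff / p_ctrl

    # Pooled standard error for the test of "no difference".
    pooled = (test_conv + ctrl_conv) / (test_n + ctrl_n)
    se_pooled = sqrt(pooled * (1 - pooled) * (1 / test_n + 1 / ctrl_n))
    z = diff / se_pooled
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))

    # Unpooled standard error for the interval on the absolute difference.
    se_diff = sqrt(p_test * (1 - p_test) / test_n + p_ctrl * (1 - p_ctrl) / ctrl_n)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)

    return {
        "lift": lift,
        "abs_diff": diff,
        "ci_low": diff - z_crit * se_diff,
        "ci_high": diff + z_crit * se_diff,
        "p_value": p_value,
        "significant": p_value < (1 - confidence),
    }


if __name__ == "__main__":
    print(lift_report(test_conv=600, test_n=10_000, ctrl_conv=500, ctrl_n=10_000))
```

For geo-level tests with only a handful of regions, this user-level approximation does not apply directly, and a method suited to few aggregated units is a better fit.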

Step 8: Business Impact Calculation (Week 3)

Convert incremental lift into revenue impact and true ROAS. Factor in the cost of not showing ads to the control group.
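As a worked version of Step 8, the sketch below scales the control group's revenue per user up to the size of the test group to estimate baseline revenue, then derives incremental revenue, incremental ROAS, and an estimate of the revenue forgone by holding out the control group. The function name and all figures are hypothetical, and the holdout cost assumes control users would have responded like test users.

```python
def incremental_roas(test_revenue, test_users, ctrl_revenue, ctrl_users, spend):
    """Turn test and control revenue into incremental revenue and iROAS."""
    if test_users <= 0 or ctrl_users <= 0 or spend <= 0:
        raise ValueError("group sizes and spend must be positive")

    # What the test group would have generated without ads,
    # estimated from the control group's revenue per user.
    baseline_per_user = ctrl_revenue / ctrl_users
    baseline_revenue = baseline_per_user * test_users

    incremental_revenue = test_revenue - baseline_revenue
    incremental_per_user = incremental_revenue / test_users

    return {
        "baseline_revenue": baseline_revenue,
        "incremental_revenue": incremental_revenue,
        "incremental_roas": incremental_revenue / spend,
        # Revenue forgone by not showing ads to the control group.
        "holdout_cost": incremental_per_user * ctrl_users,
    }


if __name__ == "__main__":
    print(incremental_roas(
        test_revenue=120_000, test_users=90_000,
        ctrl_revenue=10_000, ctrl_users=10_000,
        spend=20_000,
    ))
```

In this example the campaign would report 6.0x ROAS if all test-group revenue were credited to ads (120,000 / 20,000), while the incremental figure is 1.5x.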

Step 9: Scaling Decision (Week 4)

Based on incrementality results, decide whether to scale up, optimize, or pause campaigns. Create a rollout plan for applying insights.

Step 10: Continuous Optimization (Ongoing)

Establish quarterly incrementality testing cycles. As you gather more data, refine your testing methodology and expand to additional campaigns.

Industry-Specific Implementation Strategies

Gaming Apps: Incrementality in Hyper-Casual vs. Mid-Core

a. Hyper-Casual Gaming Incrementality:

Challenge:

Short user lifecycles and high organic discovery rates make incrementality measurement critical but difficult.

Approach:

Focus on Day 1 retention and early monetization metrics rather than long-term LTV. Use 48-72 hour test periods for faster insights.

Key Metrics:

1. Incremental Day 1 retention

2. Incremental session frequency in first 24 hours

3. Incremental ad revenue per user (for ad-monetized games)

4. Time-to-first-purchase for IAP games

Testing Framework:

Geographic holdouts work well since hyper-casual games typically have global, undifferentiated audiences. Test in 3-5 similar countries while holding out 2-3 as controls.

b. Mid-Core Gaming Incrementality:

Challenge:

Longer consideration periods and higher baseline engagement make it harder to isolate campaign impact.

Approach:

Extended testing periods (2-4 weeks) focusing on engagement depth and monetization progression.

Key Metrics:

1. Incremental tutorial completion rates

2. Incremental progression through early game levels

3. Incremental first purchase timing and value

4. Incremental social feature engagement

Testing Framework:

User cohort holdouts based on registration timing work better than geographic splits, since mid-core games often have distinct regional preferences.

E-Commerce: Measuring Incremental Cart Additions from UA

a. Shopping App Incrementality Strategy:

Pre-Purchase Metrics:

1. Incremental app installs leading to first browse session

2. Incremental product page views per session

3. Incremental cart additions within first 7 days

4. Incremental wishlist/favorite actions

Purchase Metrics:

1. Incremental first purchase rate and timing

2. Incremental average order value

3. Incremental purchase frequency over 30-60 days

4. Incremental customer lifetime value

Seasonal Considerations

E-commerce incrementality varies dramatically by season. Black Friday/Cyber Monday periods show different incrementality patterns than regular shopping periods. Test during both peak and off-peak periods.

Testing Methodology

Combine geographic and temporal controls. Run campaigns in test markets during specific promotional periods while using similar markets and time periods as controls.
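Combining geographic and temporal controls amounts to a difference-in-differences comparison: the change in test markets minus the change in control markets over the same period. The sketch below assumes daily order counts per market group; the function name and the numbers are made up for illustration.

```python
from statistics import mean


def diff_in_diff(test_pre, test_post, ctrl_pre, ctrl_post):
    """Difference-in-differences on average daily outcomes.

    Each argument is a list of daily values (for example, orders per day)
    for the test or control markets, before or during the campaign.
    """
    test_change = mean(test_post) - mean(test_pre)
    ctrl_change = mean(ctrl_post) - mean(ctrl_pre)

    # Incremental effect: test-market change beyond the shared trend.
    did = test_change - ctrl_change

    # Expected test-market level if it had followed the control trend.
    counterfactual = mean(test_pre) + ctrl_change
    return {
        "incremental_per_day": did,
        "relative_lift": did / counterfactual,
    }


if __name__ == "__main__":
    print(diff_in_diff(
        test_pre=[100, 110, 90], test_post=[150, 160, 140],
        ctrl_pre=[80, 85, 75], ctrl_post=[100, 105, 95],
    ))
```

The estimate is only as good as the parallel-trends assumption, so check that test and control markets moved together before the promotional period.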

Fintech: Incrementality in Compliance-Heavy User Onboarding

a. Fintech Incrementality Challenges:

Regulatory Constraints:

Financial services marketing is heavily regulated, limiting creative testing and audience targeting options.

Long Consideration Cycles:

Users often research financial products extensively before converting, making attribution windows crucial.

High Security Requirements:

KYC (Know Your Customer) and AML (Anti-Money Laundering) requirements affect user onboarding flows and conversion measurement.

b. Fintech Incrementality Framework:

Awareness Stage:

1. Incremental educational content engagement

2. Incremental app downloads from financial literacy content

3. Incremental email signups for financial guides

Consideration Stage:

1. Incremental account creation initiations

2. Incremental document upload completions

3. Incremental customer service contact rates

Conversion Stage:

1. Incremental KYC completion rates

2. Incremental first deposit/transaction timing

3. Incremental product adoption (checking, savings, investments)

Retention Stage:

1. Incremental monthly active usage

2. Incremental additional product adoption

3. Incremental referral generation

Tools and Platform Integration Guide

For an in-depth comparative analysis of incrementality testing methodologies across leading ad platforms and Mobile Measurement Partners, explore our comprehensive guide: "The Incrementality Imperative: A Comparative Analysis of Measurement Tools from Google, Meta, AppsFlyer, Adjust, and Singular."

This detailed examination breaks down the unique approaches, strengths, and limitations of each platform's incrementality solutions to help you make informed measurement decisions.

AppsFlyer Incrementality Suite:

1. Capabilities: Automated holdout testing, geo-lift studies, audience suppression experiments

2. Best For: Teams wanting automated incrementality without data science resources

3. Pricing: Premium feature available to subscribers. Pricing available upon request.

4. Integration: Works with existing AppsFlyer implementation, no additional SDK required

Adjust Incrementality Studies:

1. Capabilities: Cohort-based testing, cross-platform incrementality, MMM integration

2. Best For: Apps with significant cross-platform user bases

3. Pricing: Paid add-on ('Growth Solution'). Pricing available upon request.

4. Integration: Requires Adjust Automate for full functionality

Singular's Custom Incrementality Testing:

1. Capabilities: MMM-powered incrementality, global campaign optimization, cross-channel attribution

2. Best For: Enterprise teams managing complex, multi-channel campaigns

3. Pricing: N/A (Data foundation platform).

4. Integration: Comprehensive data pipeline setup required

Custom Implementation: Building Your Own Incrementality Framework

Required Resources:

1. Data analyst/scientist (0.5-1.0 FTE)

2. Campaign management tool with audience suppression

3. Statistical analysis software (R, Python, or advanced Excel)

4. Business intelligence dashboard for ongoing reporting

Implementation Timeline:

Month 1: Data infrastructure setup, baseline measurement implementation

Month 2: First incrementality test design and launch

Month 3: Results analysis and optimization framework development

Month 4+: Automated testing cycles and continuous optimization

Cost Estimate: $15,000-30,000 for initial setup, $5,000-10,000 monthly ongoing costs

Overcoming Objections and Advanced Topics

Addressing Stakeholder Skepticism

1. "But Our Last-Click Data Shows We're Profitable"

The Response Framework:

Acknowledge Current Performance: "Yes, our campaigns are generating revenue, but we need to understand how much is truly incremental."

Quantify the Risk: "Industry data shows 20-40% of attributed revenue would have occurred anyway. On our $X budget, that's potentially $Y in wasted spend."

Propose Low-Risk Testing: "Let's run a small incrementality test on 10% of one campaign to validate our attribution before scaling further."

Show Competitive Advantage: "Companies using incrementality measurement typically see 25% better budget efficiency. This gives us a significant edge."

2. "We Don't Have Time for Complex Testing"

Time-Efficient Implementation:

Week 1: Set up automated holdout testing using existing MMP features

Week 2-3: Let the test run with minimal management overhead

Week 4: Analyze results and implement initial optimizations

Total Time Investment: 4-6 hours across 4 weeks

Automation Options:

1. Most MMPs offer "set and forget" incrementality testing

2. Results integrate into existing reporting dashboards

3. Optimization recommendations are generated automatically

3. "What If Incrementality Tests Hurt Our Performance?"

Risk Mitigation Strategies:

1. Start Small: Test with 5-10% of budget, not entire campaigns

2. Choose Stable Campaigns: Avoid testing during major product launches or seasonal peaks

3. Plan for Learning Value: Even "failed" tests provide valuable incrementality insights

4. Short Test Windows: Most tests need only 2-3 weeks for statistical significance

5. Expected Performance Impact: Temporary 5-15% performance dip during testing typically leads to 20-40% efficiency improvements post-optimization

Advanced Incrementality Concepts

AI-Driven Incrementality Optimization

Machine Learning Applications:

1. Predictive Incrementality Modeling: Use historical incrementality test results to predict which audiences, creatives, and channels are most likely to drive incremental conversions without running new tests.

2. Real-Time Optimization: AI algorithms can adjust bidding and budget allocation based on predicted incrementality scores, optimizing for incremental rather than attributed conversions.

3. Cross-Campaign Learning: Machine learning models can identify patterns across different incrementality tests, helping predict outcomes for untested campaigns based on similar characteristics.

Implementation Approaches:

1. Incremental Bidding Models:

a. Adjust bid amounts based on audience incrementality scores

b. Higher bids for high-incrementality audiences, lower bids for likely non-incremental traffic

c. Platforms like Google and Facebook are developing incrementality-aware bidding options

2. Creative Incrementality Scoring:

a. Test different ad creatives for incrementality, not just conversion rate

b. Some creative approaches drive higher baseline awareness, making subsequent ads less incremental

c. AI models can predict creative incrementality based on visual and message elements

3. Adaptation Strategies for Web3 Apps:

a. Wallet-Based Attribution: Use blockchain wallet addresses as persistent identifiers for incrementality testing, respecting user privacy while enabling measurement.

b. Community Baseline Measurement: Establish baseline conversion rates within different Web3 communities before measuring campaign incrementality.

c. Token Incentive Controls: Create control groups that receive non-token incentives to isolate the incremental effect of cryptocurrency rewards.

Privacy-First Future: Incrementality in 2026 and Beyond

Emerging Technologies:

1. Federated Learning for Incrementality: Models that learn from aggregated data across multiple advertisers without sharing individual user data, improving incrementality predictions while maintaining privacy.

2. Differential Privacy Integration: Statistical techniques that add calculated noise to datasets, enabling incrementality measurement while making individual user behavior impossible to identify.

3. Synthetic Data Generation: AI-generated datasets that maintain statistical properties of real user behavior for incrementality testing without using actual personal data.
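To make the differential privacy item concrete, the sketch below adds Laplace noise to aggregate conversion counts before computing lift. It is a toy illustration of the Laplace mechanism, not how any particular platform implements it. The function names, the epsilon value, and the assumption that group sizes are public are all hypothetical.

```python
import random


def noisy_count(true_count, epsilon, rng, sensitivity=1.0):
    """Release a count with Laplace noise of scale sensitivity / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = sensitivity / epsilon
    # The difference of two i.i.d. exponential draws with mean `scale`
    # follows a Laplace(0, scale) distribution.
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
    return true_count + noise


def noisy_lift(test_conv, test_n, ctrl_conv, ctrl_n, epsilon, rng):
    """Relative lift computed only from noised conversion counts."""
    p_test = noisy_count(test_conv, epsilon, rng) / test_n
    p_ctrl = noisy_count(ctrl_conv, epsilon, rng) / ctrl_n
    if p_ctrl <= 0:
        raise ValueError("noised control rate is not positive; group too small")
    return (p_test - p_ctrl) / p_ctrl


if __name__ == "__main__":
    rng = random.Random(7)  # seeded only to make the demo repeatable
    print(noisy_lift(6_000, 100_000, 5_000, 100_000, epsilon=1.0, rng=rng))
```

With thousands of conversions per group the noise barely moves the lift estimate, while for very small groups it can dominate. That trade-off is why privacy-preserving measurement favors aggregate designs.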

Regulatory Considerations:

1. Global Privacy Harmonization: As privacy regulations align globally, incrementality measurement techniques that work across GDPR, CCPA, and emerging regulations will become crucial.

2. First-Party Data Maximization: Incrementality testing will increasingly rely on first-party data collected directly by apps, reducing dependence on third-party tracking.

3. Consent-Based Measurement: Users who opt into data sharing may participate in incrementality testing, while privacy-focused users contribute to aggregate insights through differential privacy.

Advanced Metrics and KPI Frameworks:

Beyond Basic Incrementality: Sophisticated Measurement

1. Incremental Customer Lifetime Value (iCLV):

a. Measures the incremental lifetime value generated by marketing campaigns

b. Accounts for both incremental acquisition and incremental behavior changes in existing users

c. Formula: iCLV = (Incremental Users × Average LTV) + (All Users × Incremental LTV per User)

2. Incrementality Velocity:

a. Measures how quickly incremental effects manifest and decay

b. Critical for determining optimal campaign flight durations and budget allocation timing

c. Helps distinguish between immediate incremental effects and long-term brand building

3. Cross-Channel Incrementality Attribution:

a. Measures how campaigns in one channel affect incrementality in others

b. Accounts for complementary effects (channels that make each other more effective)

c. Identifies cannibalization effects (channels that reduce each other's effectiveness)
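The iCLV formula above can be worked through with a few lines of arithmetic. The function name and the input figures below are hypothetical and only show how the two terms combine.

```python
def incremental_clv(incremental_users, average_ltv, all_users, incremental_ltv_per_user):
    """iCLV = (Incremental Users x Average LTV) + (All Users x Incremental LTV per User)."""
    # Value from users who would not have been acquired without the campaign.
    acquisition_value = incremental_users * average_ltv
    # Value from behavior changes spread across the whole user base.
    behavior_value = all_users * incremental_ltv_per_user
    return {
        "acquisition_value": acquisition_value,
        "behavior_value": behavior_value,
        "iclv": acquisition_value + behavior_value,
    }


if __name__ == "__main__":
    # 2,000 incremental users at $30 average LTV, plus a $0.50
    # per-user LTV increase across 50,000 users.
    print(incremental_clv(2_000, 30.0, 50_000, 0.50))
```

Keeping the two terms separate in the output makes it easier to see whether a campaign earns its value through new users or through changed behavior.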

Statistical Rigor in Incrementality Testing

1. Power Analysis for Test Design:

a. Calculate required sample sizes for detecting meaningful incrementality differences

b. Account for baseline conversion rates, expected lift sizes, and statistical confidence levels

c. Avoid underpowered tests that waste budget without generating actionable insights

2. Multiple Testing Corrections:

a. When running multiple incrementality tests simultaneously, adjust significance thresholds

b. Use Bonferroni corrections or false discovery rate controls to avoid false positives

c. Critical for teams running many concurrent experiments

3. Confidence Intervals and Effect Sizes:

a. Report incrementality results with confidence intervals, not just point estimates

b. Focus on effect sizes (magnitude of incrementality) rather than just statistical significance

c. Small but statistically significant effects may not justify optimization costs
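The first two items can be sketched with the standard library. The sample-size function uses the usual normal-approximation formula for comparing two proportions, and the correction helpers implement Bonferroni and Benjamini-Hochberg. Function names and inputs are illustrative assumptions.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Users needed per group to detect a relative lift (two-sided test)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


def bonferroni_alpha(alpha, num_tests):
    """Per-test significance threshold under a Bonferroni correction."""
    return alpha / num_tests


def benjamini_hochberg(p_values, q=0.05):
    """Return one True/False per test: rejected under FDR control at level q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            cutoff_rank = rank  # keep the largest rank that passes
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= cutoff_rank:
            rejected[idx] = True
    return rejected


if __name__ == "__main__":
    print(sample_size_per_group(baseline_rate=0.05, relative_lift=0.10))
    print(bonferroni_alpha(0.05, 4))
    print(benjamini_hochberg([0.001, 0.02, 0.04, 0.30]))
```

Bonferroni is the stricter of the two corrections. False discovery rate control gives up less power when many tests run at once, at the price of tolerating a small share of false positives.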

Conclusion: Breaking Free from Myths to Drive True Growth

The Path Forward: From Attribution to Incrementality

The seven myths we've debunked represent more than measurement mistakes—they're strategic blind spots that prevent mobile UA teams from achieving their full potential. Companies that break free from last-click thinking and embrace incrementality measurement don't just improve their ROAS; they fundamentally transform how they approach growth.

The Immediate Actions

Every VP of Growth and Head of UA should audit their current attribution setup against these myths within the next 30 days. Start with Myth #4—if your highest-ROAS campaigns haven't been tested for incrementality, you're likely leaving money on the table.

The Long-Term Vision

Incrementality measurement isn't a nice-to-have analytical exercise; it's the foundation for sustainable growth in a privacy-first, competitive mobile ecosystem. Teams that master incremental thinking will have decisive advantages in budget efficiency, audience targeting, and channel optimization.

Key Takeaways for Different Roles

For VPs of Growth:

Incrementality measurement should be a board-level KPI, not a data team side project

Budget allocation decisions based on incremental ROAS typically outperform those based on last-click ROAS by 25-40%

The competitive advantage comes from finding incremental growth opportunities competitors miss

For Heads of UA:

Start incrementality testing with your highest-spend campaigns—they're most likely to reveal non-incremental traffic

Build incrementality testing into your quarterly campaign review process

Use incrementality insights to negotiate better rates with ad platforms based on true performance

For Growth PMs and Analysts:

Incrementality measurement requires different statistical approaches than standard A/B testing

Focus on building incrementality testing capabilities that scale across campaigns and channels

Create automated reporting that shows both attributed and incremental performance side-by-side

The Future of Mobile UA: An Incrementality-First World

AI-Powered Optimization:

The next generation of mobile UA tools will optimize for predicted incrementality, not just conversion likelihood. Early adopters of these approaches will have significant competitive advantages.

Cross-Platform Integration:

As user journeys become more complex across devices and platforms, incrementality measurement will be the only reliable way to understand true marketing impact.

Privacy-Safe Growth:

Incrementality testing works within privacy constraints better than individual user tracking, making it the sustainable approach for long-term growth.

Your Next Steps

This Week: Audit your top 3 campaigns against Myth #4. If they show >4x ROAS, they need incrementality testing.

This Month: Implement your first holdout test using the 10-step framework in the Implementing Incrementality section.

This Quarter: Expand incrementality testing to cover 20-30% of your total UA spend.

This Year: Build incrementality measurement into your standard campaign optimization process.

The mobile UA teams that embrace incrementality measurement now will be the ones driving sustainable, profitable growth while their competitors waste budget on non-incremental traffic.

The question isn't whether you should adopt incrementality measurement—it's whether you can afford not to. In a world where every marketing dollar counts, incrementality isn't just better measurement; it's your competitive advantage.

FAQ: Answering Hundreds of Long-Tail Questions

Basics and Fundamentals

- Q: What's the simplest way to explain incrementality to executives who only understand ROAS? A: "Incrementality measures the users who wouldn't have converted without your ads, while ROAS measures all users who converted after seeing your ads—including those who would have converted anyway. True ROAS should only count incremental users."

- Q: How long does it take to see results from incrementality testing? A: Most incrementality tests provide actionable insights within 2-4 weeks. However, implementing optimizations based on those insights and seeing full ROI typically takes 2-3 months.

- Q: Can small apps with limited budgets still benefit from incrementality testing? A: Yes. Start with simple holdout tests using 10% of your audience on your highest-spend campaigns. Even apps spending $5,000/month can run meaningful incrementality tests.

- Q: What's the difference between incrementality and lift studies? A: Lift studies typically measure brand awareness or intent changes, while incrementality measures actual behavioral changes (installs, purchases, retention). Incrementality is more directly tied to business outcomes.

- Q: How does incrementality testing work for apps with very long sales cycles? A: Use early-funnel metrics as proxies (email signups, account creations, demo requests) and model their correlation to eventual conversions. Extend test windows to 6-8 weeks for more complete data.

Implementation and Technical Questions

- Q: How do you run incrementality tests on Meta Ads for fintech apps with under 10,000 users? A: Use Meta's Conversion Lift tool, which works with smaller audiences. Focus on key conversion events (account creation, first deposit) rather than downstream metrics that need larger sample sizes.

- Q: What's the difference between ghost bidding and holdout testing? A: Ghost bidding participates in auctions without showing ads, measuring who would have been reached. Holdout testing randomly excludes users from campaigns entirely. Ghost bidding is more precise but requires platform support.

- Q: Can incrementality be measured retroactively on historical campaign data? A: Limited retrospective analysis is possible using synthetic control methods or comparing campaign-on vs campaign-off periods, but prospective testing with proper control groups is always more reliable.

- Q: How does incrementality apply to subscription-based apps like streaming services? A: Focus on incremental subscriber acquisition and incremental subscription duration. Test whether campaigns drive users who wouldn't have subscribed organically, and whether they stay subscribed longer.

- Q: What sample sizes do you need for statistically significant incrementality tests? A: Depends on baseline conversion rates and expected lift. Generally, you need 1,000+ conversions in each test cell for statistical significance, though this can be lower for higher-lift scenarios.

- Q: How do you handle seasonality in incrementality testing? A: Use year-over-year comparisons for seasonal businesses, run tests during both peak and off-peak periods, or use synthetic control methods that account for seasonal trends in the control group selection.

- Q: Does Firebase Analytics support incrementality experiments for free? A: Firebase A/B Testing can create holdout groups and measure conversion differences, providing basic incrementality insights. However, statistical analysis and significance testing require manual calculation.

- Q: How do you measure incrementality for influencer marketing campaigns? A: Use unique promo codes or landing pages per influencer, combined with geographic or temporal controls. Compare conversion rates in markets/periods with influencer campaigns vs. without.

- Q: What's the relationship between incrementality and attribution windows? A: Longer attribution windows capture more conversions but may include fewer incremental users, since more of the captured users would have converted organically over time. Test different windows to find the optimal balance.

- Q: How do you run incrementality tests across multiple ad platforms simultaneously? A: Create matched control groups that see no ads on any platform, then measure lift vs. groups seeing campaigns on different platform combinations. This reveals cross-platform incrementality and cannibalization.

Industry-Specific Applications

- Q: How does incrementality measurement differ for B2B vs. B2C mobile apps? A: B2B incrementality focuses on lead quality and sales cycle acceleration rather than just volume. Test whether campaigns generate leads that are more likely to close and close faster.

- Q: For gaming apps, how do you measure incrementality of in-app purchase campaigns targeting existing users? A: Compare spending behavior between users who see IAP promotion ads vs. control groups who don't. Focus on incremental revenue per user and purchase timing acceleration.

- Q: How do you apply incrementality to app store optimization (ASO) efforts? A: Run A/B tests of app store elements (screenshots, descriptions) in different geographic markets while using similar markets as controls. Measure organic install lift between test and control regions.

- Q: What's the best way to measure incrementality for referral programs in mobile apps? A: Create control groups that don't receive referral program invitations, then measure both direct referrals and secondary effects (increased engagement from referrers).

- Q: How does incrementality work for location-based apps like food delivery? A: Use geographic micro-targeting to create test and control neighborhoods, measuring incremental order volume and user activation within specific delivery zones.

- Q: For e-commerce apps, how do you separate incrementality of discovery vs. conversion campaigns? A: Run sequential testing: measure incrementality of discovery campaigns (view-through effects), then measure conversion campaign incrementality among users already aware of your app.

Advanced Measurement and Analytics

- Q: How do you calculate incremental customer lifetime value (LTV)? A: Formula: Incremental LTV = (Incremental Users × Average LTV) + (All Users × Incremental Behavior LTV). The second part captures how campaigns change behavior of existing users.

- Q: What's the difference between Shapley value attribution and incrementality measurement? A: Shapley value distributes credit among touchpoints based on marginal contribution, but still attributes conversions that would have happened anyway. Incrementality measures whether marketing changed behavior at all.

- Q: How do you measure cross-channel incrementality effects? A: Use factorial experimental designs where different user groups see different channel combinations. This reveals both individual channel incrementality and interaction effects.

- Q: Can machine learning models predict incrementality without running tests? A: ML models can predict incrementality for similar audiences/campaigns based on historical test results, but periodic testing is still needed to validate predictions and account for market changes.

- Q: How do you adjust incrementality measurement for competitive effects? A: Monitor competitor activity during test periods and use difference-in-differences analysis to separate your campaign effects from market-wide changes caused by competitor actions.

- Q: What's the role of media mix modeling (MMM) in incrementality measurement? A: MMM provides top-down incrementality insights across all channels over time, while campaign-level incrementality testing provides bottom-up validation and optimization opportunities.

- Q: How do you measure incrementality in omnichannel campaigns that span online and offline? A: Use geographic testing with regions receiving different channel combinations, measure lift in both online metrics (app installs, purchases) and offline metrics (store visits, in-store sales).

- Q: What statistical methods are best for incrementality analysis? A: Difference-in-differences for temporal controls, synthetic control methods for complex baselines, and randomized controlled trials for cleanest measurement. Choice depends on your test design constraints.
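The factorial design mentioned in the cross-channel answer above can be illustrated with a 2x2 example: one group sees no ads, one sees only channel A, one only channel B, and one sees both. The sketch assumes conversion rates per cell have already been measured. The function name and the rates are hypothetical.

```python
def factorial_effects(rates):
    """Channel effects and interaction from a 2x2 factorial test.

    `rates` maps (a_on, b_on) to the conversion rate of that cell,
    e.g. (True, False) is the group that saw channel A only.
    """
    base = rates[(False, False)]
    effect_a = rates[(True, False)] - base
    effect_b = rates[(False, True)] - base
    effect_both = rates[(True, True)] - base

    # Positive: the channels reinforce each other.
    # Negative: they cannibalize each other.
    interaction = effect_both - effect_a - effect_b
    return {
        "effect_a": effect_a,
        "effect_b": effect_b,
        "effect_both": effect_both,
        "interaction": interaction,
    }


if __name__ == "__main__":
    print(factorial_effects({
        (False, False): 0.020,
        (True, False): 0.030,
        (False, True): 0.025,
        (True, True): 0.040,
    }))
```

Each cell needs enough users to measure its own rate reliably, so factorial designs get expensive quickly as channels are added.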

Platform and Tool-Specific Questions

- Q: Does Google Ads' data-driven attribution approximate incrementality? A: Data-driven attribution better approximates incrementality than last-click, but it still credits touchpoints for conversions that would have happened anyway. Use Google's lift studies for true incrementality measurement.

- Q: How does Apple's SKAdNetwork enable incrementality measurement for iOS apps? A: SKAdNetwork's conversion values can be configured to measure incremental behaviors (time-to-conversion, engagement depth) rather than just conversion volume, providing incrementality insights within privacy constraints.

- Q: Can you run incrementality tests using TikTok's advertising platform? A: TikTok offers Brand Lift Studies for awareness metrics and Conversion Lift Studies for conversion outcomes. If a managed study isn't an option for your account, use geographic or audience-based holdouts within TikTok's campaign management.

- Q: How do incrementality insights integrate with customer data platforms (CDPs)? A: CDPs can segment users based on incrementality scores, enabling personalized experiences for high-incrementality audiences while reducing spend on low-incrementality segments.

- Q: Does Amazon's advertising platform support incrementality measurement? A: Amazon offers Brand Lift studies and is developing conversion lift capabilities for app advertisers. Use Amazon's DSP for geographic holdout testing across Amazon's advertising inventory.

Privacy and Compliance

- Q: How does GDPR compliance affect incrementality testing in Europe? A: GDPR requires explicit consent for data processing, which can create selection bias in incrementality tests. Use aggregated testing methods and ensure control groups are formed without processing personal data.

- Q: Can you run incrementality tests without collecting personal user data? A: Yes, through geographic testing, temporal controls, and aggregated measurement approaches that measure lift without tracking individual users. These methods actually provide more reliable results.

- Q: How will Google's Privacy Sandbox affect incrementality measurement? A: Privacy Sandbox's Attribution Reporting API provides conversion measurement without cross-party identifiers. It is an attribution API rather than a lift tool, but its aggregated reports can supply the conversion counts that a privacy-preserving lift design compares between test and control groups.

- Q: What's the impact of iOS 15's Mail Privacy Protection on email incrementality testing? A: MPP affects email open tracking but not click-through or conversion measurement. Focus incrementality testing on downstream actions (app opens, purchases) rather than email engagement metrics.

Budget and ROI Considerations

- Q: What percentage of UA budget should be allocated to incrementality testing? A: Start with 10-15% of budget for testing, expanding to 25-30% as you build confidence and capabilities. The learning value typically justifies the temporary performance impact.

- Q: How do you calculate ROI of incrementality testing itself? A: ROI = (Budget Savings from Eliminating Non-Incremental Spend) / (Cost of Testing + Opportunity Cost of Holdouts). Most teams see 300-500% ROI within the first quarter.

- Q: What's the minimum budget needed to run meaningful incrementality tests? A: Geographic holdouts can work with budgets as low as $2,500/month. Audience holdouts need higher budgets ($10,000+/month) for statistical significance.

- Q: How do you justify incrementality testing budgets to finance teams? A: Frame it as budget optimization, not testing cost. Show the potential savings from eliminating non-incremental spend (typically 20-40% of current budgets) vs. the small cost of testing (5-10% of budgets).

Future Trends and Innovation

- Q: How will AI change incrementality measurement in the next 5 years? A: AI will enable real-time incrementality prediction, automated test design, and continuous optimization based on predicted incremental value rather than attributed conversions.

- Q: What role will incrementality play in Web3 and metaverse marketing? A: Web3's community-driven discovery and token-based incentives create new baseline behaviors that traditional incrementality models don't account for. New measurement approaches will be needed.

- Q: How might quantum computing affect incrementality measurement? A: Quantum computing could enable more sophisticated statistical modeling and privacy-preserving computation, allowing for complex incrementality analysis while maintaining individual user privacy.

- Q: Will incrementality measurement replace attribution entirely? A: Incrementality and attribution serve different purposes. Attribution tracks the user journey; incrementality measures whether marketing changed behavior. The future likely involves integrated approaches using both.

This comprehensive FAQ addresses the most common questions about incrementality measurement in mobile UA, providing practical answers that teams can implement immediately while building toward more sophisticated measurement capabilities.
