
However, with the increasing adoption of artificial intelligence (AI) in digital marketing, the technology may not only help marketers better target their ideal audience but also help protect advertiser dollars.

Programmatic Could Compromise Your Brand Safety

Brand safety came under the spotlight following a number of advertising mishaps in early 2017. Alexi Mostrous of The Times revealed that many household brands were unwittingly supporting terrorism on YouTube by running their ads on hate-speech and Islamic State videos. Later the same year, ads from some of the world’s biggest brands were found running alongside videos that sexually exploited children. This caused widespread panic, with many brands pulling programmatic spend until publishers like Google could assure them that measures were being taken to filter out such content.

By 2018, brand safety had broadened to cover any offensive, illegal or inappropriate content that appears next to a brand’s assets and threatens its reputation and image. This could include controversial news stories or opinion pieces, fake news, or content that is not aligned with a brand’s values – for example, a fast-food company’s ad appearing next to an article about heart disease.

In a recent survey, 72 percent of marketers said they were concerned about brand safety in programmatic advertising, and more than a quarter of respondents said their ads had at some point been displayed alongside controversial content.

Why are brands running scared? Because such placements shape how consumers see a brand. Nearly half of consumers say they would definitely boycott products advertised alongside offensive content, and a further 38 percent report losing trust in such brands.

To be fair to brands, they are not knowingly choosing to support such content.

Traditional Techniques Come With Limitations

Brands understand the damage that offensive content could do to their image, but it is not feasible for them to implement customized brand safety measures for every ad placement.

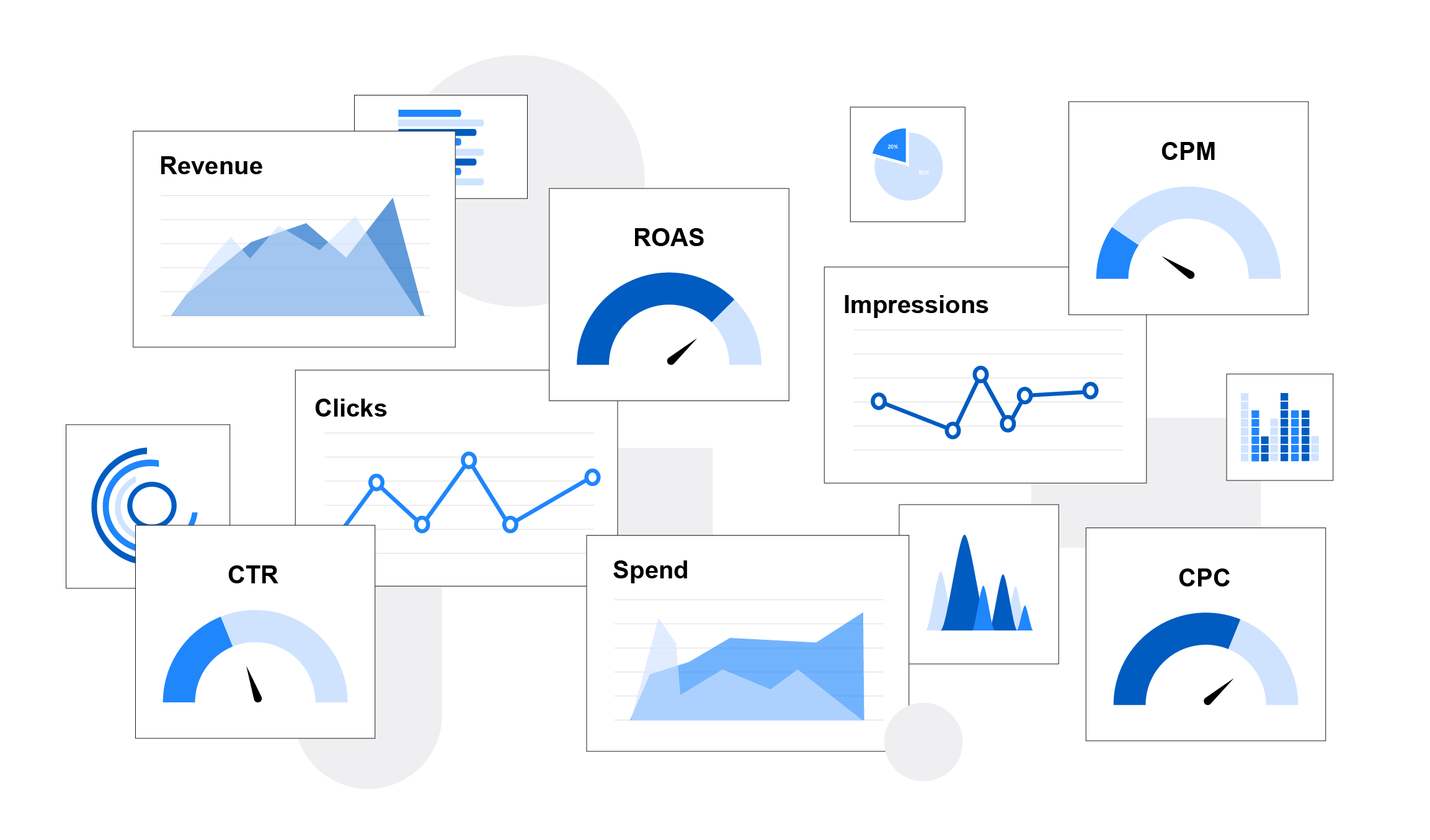

Digital advertisers typically do not have direct relationships with publishers. Ad exchanges receive inventory from thousands of websites and auction it off within milliseconds on the basis of demographics, domain and ad size. Hence, bids are evaluated for audience relevance only, with no check for context or appropriateness.

While the explosion of programmatic may be offering more opportunities to reach the right audience, the sheer volume also makes it difficult to monitor.

Of course, there are some topics that no brand will advertise around, such as terrorism, pornography and violence. Brands can steer clear of such content by using blacklists, whitelists and keyword blocking, but these techniques have their own limitations.

A keyword blacklist, for example, lists individual words that a brand does not want to be associated with, but it ignores nuance and context. As a result, some ads still land on unsafe pages, while placements that are in fact safe get blocked.
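To see why word-level matching misfires, here is a minimal Python sketch of a naive keyword blacklist. The blacklist and the page snippets are hypothetical, made up for illustration; real ad-verification tools are more sophisticated, but the failure mode is the same: a harmless photography tip is blocked because it contains "shooting", while an extremist page that happens to contain none of the listed words is allowed through.

```python
# A minimal sketch of naive keyword blacklisting.
# ASSUMPTION: BLACKLIST and the sample pages are hypothetical, for illustration only.
import re

BLACKLIST = frozenset({"shooting", "bomb", "attack"})


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def is_blocked(page_text: str, blacklist: frozenset[str] = BLACKLIST) -> bool:
    """Block the placement if any blacklisted word appears, regardless of context."""
    return any(word in blacklist for word in tokenize(page_text))


if __name__ == "__main__":
    pages = [
        "Ten tips for shooting better holiday photos",     # safe, but blocked
        "Recruitment video glorifying violent extremism",  # unsafe, but allowed
        "Easy weeknight pasta recipes",                    # safe and allowed
    ]
    for page in pages:
        print(is_blocked(page), page)
```

Growing the list to catch the second page only makes the first problem worse, which is why keyword lists alone trade missed placements against blocked safe inventory.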

Safety is also subjective. A washing machine brand has nothing to lose by advertising next to content on preventing tooth decay, but the same placement could be problematic for a biscuit or chocolate brand.
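Because suitability depends on the brand, a placement check can be modelled as a shared baseline of topics no brand will accept plus a per-brand list of sensitive topics. The sketch below assumes a page's topics have already been labeled by some upstream classifier (not shown); the brand profiles and topic names are hypothetical.

```python
# ASSUMPTION: topic labels and brand profiles below are hypothetical examples.

# Topics no brand wants to appear next to.
BASELINE_AVOID = frozenset({"terrorism", "pornography", "violence"})

# Extra, brand-specific topics: unsafe for one brand, fine for another.
BRAND_AVOID = {
    "washing_machine_brand": frozenset(),
    "chocolate_brand": frozenset({"tooth_decay"}),
}


def is_safe_for(brand: str, page_topics: set[str]) -> bool:
    """A page is safe for a brand if it shares no topic with that brand's avoid list."""
    avoid = BASELINE_AVOID | BRAND_AVOID.get(brand, frozenset())
    return avoid.isdisjoint(page_topics)


if __name__ == "__main__":
    dental_article = {"dental_health", "tooth_decay"}
    for brand in BRAND_AVOID:
        print(brand, is_safe_for(brand, dental_article))
```

The same dental article passes for the washing machine brand and fails for the chocolate brand. Keeping each brand's list separate from the shared baseline also makes it easier to review one brand's rules without touching the others.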

In the long term, techniques that ignore nuance cannot fully guarantee brand safety. Nor can manual checks keep up with the volume, scale and speed that characterize programmatic today.

AI Introduces Context to Content

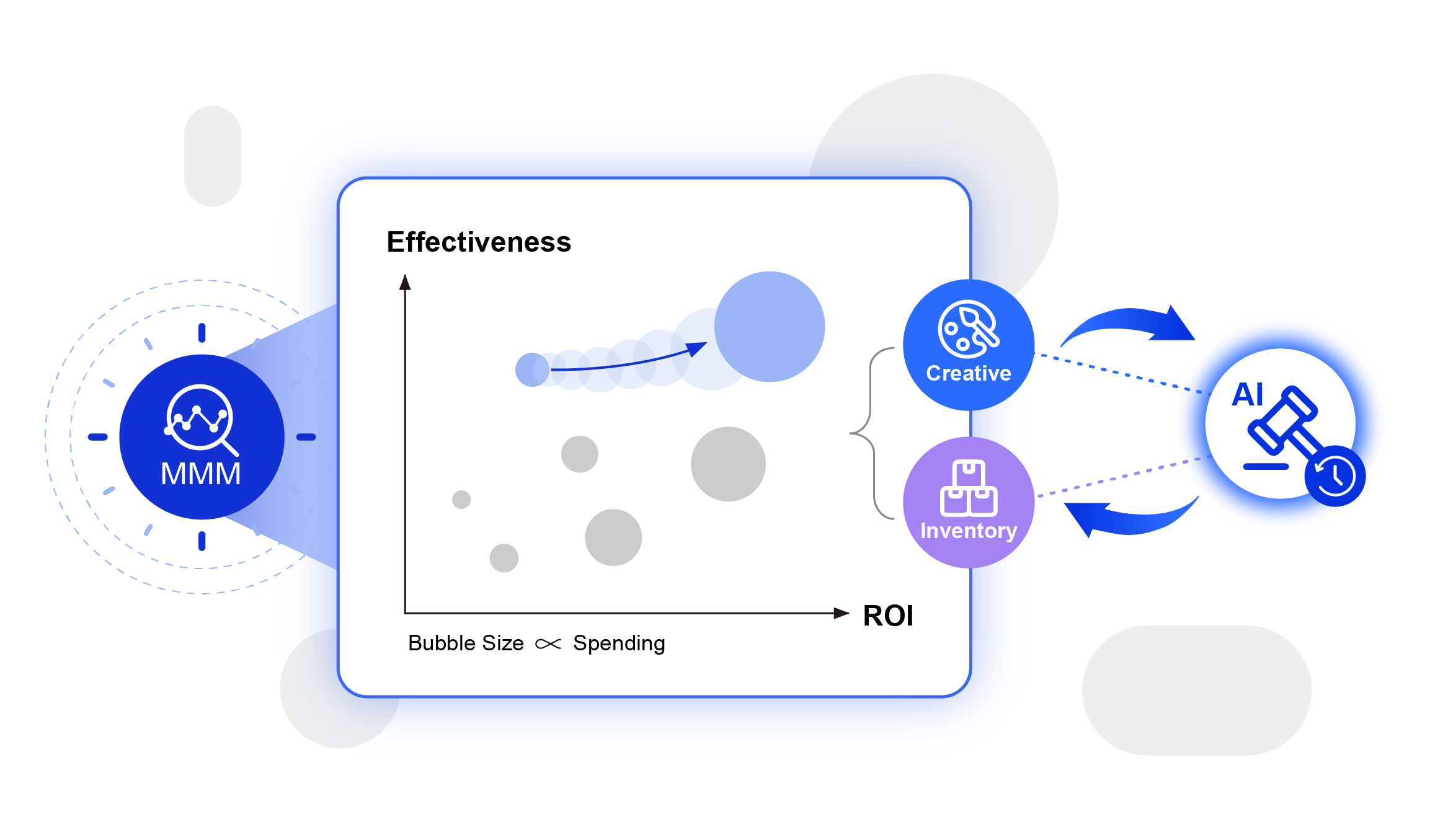

Against this backdrop, artificial intelligence, with algorithms that can understand nuance and context, is fast becoming an answer to marketers’ brand safety woes.

Although AI solutions may not eliminate false positives or prevent damage entirely, those that use machine learning (ML), natural language processing (NLP) and semantic analysis can offer the nuanced contextualisation that programmatic lacks today.

ML can ‘learn’ from how people approve or blacklist content and then use those decisions to classify new content as appropriate or offensive automatically. NLP and semantic analysis assess brand safety at a granular level by understanding the context of a page rather than looking only at its keywords or domain name.
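As a rough illustration of ML learning from human decisions, here is a minimal Python sketch that trains a naive Bayes classifier on pages that reviewers have labeled "safe" or "unsafe". The training examples are hypothetical, and adding adjacent word pairs (bigrams) as features is only a crude stand-in for the context that real NLP and semantic-analysis systems capture. Even so, it shows the same word, "shooting", being judged differently depending on the words around it, which a keyword blacklist cannot do.

```python
# A minimal sketch of learning from human approve/block decisions.
# ASSUMPTIONS: the training examples are hypothetical, and a tiny naive Bayes
# classifier over words plus bigrams stands in for far richer ML/NLP models.
import math
import re
from collections import Counter


def features(text: str) -> list[str]:
    """Words plus adjacent word pairs, e.g. 'school_shooting' vs 'shooting_video'."""
    words = re.findall(r"[a-z]+", text.lower())
    return words + [f"{a}_{b}" for a, b in zip(words, words[1:])]


class NaiveBayes:
    """Multinomial naive Bayes with add-alpha (Laplace) smoothing."""

    def __init__(self, alpha: float = 1.0) -> None:
        self.alpha = alpha
        self.feature_counts: dict[str, Counter] = {}  # label -> feature counts
        self.doc_counts: Counter = Counter()  # label -> number of examples
        self.vocab: set[str] = set()

    def fit(self, examples: list[tuple[str, str]]) -> "NaiveBayes":
        for text, label in examples:
            feats = features(text)
            self.feature_counts.setdefault(label, Counter()).update(feats)
            self.doc_counts[label] += 1
            self.vocab.update(feats)
        return self

    def predict(self, text: str) -> str:
        n_docs = sum(self.doc_counts.values())
        best_label, best_score = "", -math.inf
        for label, counts in self.feature_counts.items():
            total = sum(counts.values())
            # Log prior: how often reviewers chose this label.
            score = math.log(self.doc_counts[label] / n_docs)
            for f in features(text):
                if f in self.vocab:  # skip features never seen in training
                    score += math.log(
                        (counts[f] + self.alpha)
                        / (total + self.alpha * len(self.vocab))
                    )
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical reviewer decisions: (page text, label).
TRAINING = [
    ("tips for shooting holiday photos", "safe"),
    ("shooting portraits with natural light", "safe"),
    ("best camera for shooting video", "safe"),
    ("healthy breakfast recipes for families", "safe"),
    ("mass shooting leaves many dead", "unsafe"),
    ("police report on school shooting", "unsafe"),
    ("video glorifying violent extremism", "unsafe"),
    ("extremist group recruitment video", "unsafe"),
]

model = NaiveBayes().fit(TRAINING)
print(model.predict("shooting landscape photos at sunset"))  # safe
print(model.predict("another school shooting reported downtown"))  # unsafe
```

Naive Bayes is used here only because it fits in a few lines; it is not a claim about what any particular vendor uses. In practice, the labeled examples would come from the brand's own approve and block decisions, which is why the quality of those decisions matters so much.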

By using AI tools that can rapidly process large volumes of data to detect inappropriate placements, advertisers can benefit from the scale and targeting efficiency of programmatic while avoiding potentially damaging ad placements. At the same time, AI can recover the reach that false positives erode, by recommending safe content that brands would otherwise be blind to.

After the YouTube debacle, Google confirmed that it was using AI to make YouTube content safer for brands, stating that ML allowed it to flag offensive content faster and more efficiently than manual methods.

Post-campaign analysis will also not cut it, because by then the ads have already run next to whatever content they landed on. Brands have to combine programmatic tools with AI so that placements are checked for content inappropriate to the brand message before they bid on them.

Last but not least, AI tools are only as good as the rules that drive them. Brands must therefore first define what safety means in their own context, and what they deem appropriate or offensive. Because context evolves, these rules need to be re-examined periodically, so human intervention cannot be done away with entirely; AI, however, can help brands handle the sheer volume and scale at which they operate today.